Storage for Kubernetes — Not a Problem, but an Opportunity

Last week’s KubeCon was special for everyone who has been working on OpenEBS and related software for the last few years. With OpenEBS joining the CNCF and being embraced by various speakers at KubeCon, we seem to have broken through into the consciousness of thousands of Kubernetes users, all of whom at one point seemed to be queuing up to chat with us (or to grab a sticker of the Mule visiting the Sagrada Família).

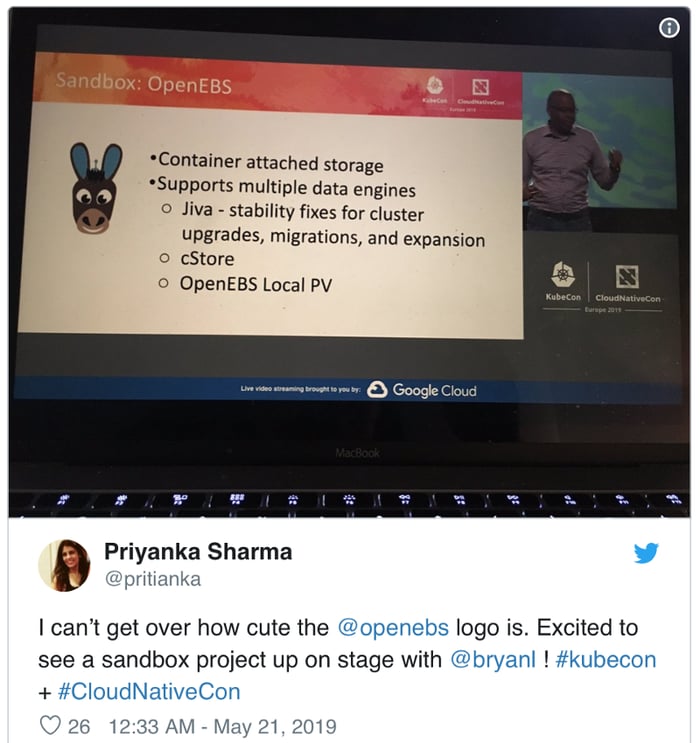

Tweets like this one (thank you Priyanka!) led to many great conversations around our booth.

https://twitter.com/pritianka/status/1130738477853581312

Many of our conversations were with existing OpenEBS users who came to meet us in person and to learn about a variety of topics, including the 0.9 release, the recently added support for LocalPV, the forthcoming high-performance storage engine, and on-premises deployments of MayaOnline. There was a decent amount of buzz about KubeMove as well; more on that below.

I’d like to give a special thanks to the engineers who stopped by from various car companies and reported that OpenEBS is now a preferred solution under a number of Kafka pipelines and even in the online configurator for a public-facing website. Not all states of this configurator (where you design your own car) are being handled by Kubernetes, but a portion is. Now they want to run all of it on Kubernetes itself rather than on external systems connected only via API or otherwise. As their usage points out, APIs check the box for connectivity; however, these and countless other users of Container Attached Storage from OpenEBS and our proprietary friends show that there is something more going on here. These users are not stopping at the API level, whether that is CSI at the storage layer or Kafka or some other layer of connectivity at the DB and data layers. Instead, they are embracing Kubernetes itself as a substrate for storage and data management.

So, why is that? And how is it that, after many years of effort, OpenEBS has only now become a bit of an overnight sensation? I thought a lot about this during the flights back to the US and the long waits while going through customs. The simplest explanation I can give is this:

At MayaData we started OpenEBS because we never believed storage for Kubernetes was simply a problem that needed to be solved — rather we have always seen it as an opportunity to be seized.

When we first set out many years ago, as a group we were very familiar with the promises and challenges of software defined storage. We knew that from what we tried to build at companies like Nexenta, CloudByte, HPE and Dell. And we knew how our feet of clay — the technologies our software itself was written in, deployed in and operated upon — kept us from delivering storage that was truly software defined.

So, we asked ourselves — what if storage FOR cloud native environments was ITSELF cloud native? More importantly, we asked hundreds of users about this.

And we didn’t just ask; we gave them some code to try. We started with a simple, naive storage approach, building on Longhorn, an orphaned Go project started by Rancher that put storage in containers, and we wrapped it with logic to make it easier to deploy and operate. We put our experiment in the hands of users and watched and listened. We learned a lot very quickly and found that our initial thesis was by and large validated. New users with new use cases were emerging as Kubernetes and containerization proliferated across on-premises and cloud environments, and these use cases promised fundamentally better and even more agile ways of building and running software that includes stateful workloads. The adoption of what we call Jiva taught us a lot and led us to build our next storage engine, cStor, which added many capabilities, including far superior data integrity and data resilience.

Now, what are these data agility use cases? What can users get done now that they simply could not accomplish with API connected storage or data? Why is it that storage for Kubernetes is more of an opportunity than simply a problem in need of fixing? I won’t go through all of these here; it would be better for you to join the OpenEBS Slack channel or ask around about how and why everyone from tier one financials to national defense organizations to large SaaS operators and others are using OpenEBS to accelerate growth and resilience while minimizing costs.

Here are a few reasons we heard many times last week at KubeCon:

What’s Mine is Mine and what’s Yours is Yours: Small Team Autonomy

- Conway’s Law is now almost commonplace, and we hear it all the time when we ask “but why?” a few times in a row to users. The upshot is that if you use underlying shared storage, it is run by somebody else with the collective needs of an entire organization in mind. And if you need it tweaked for your Kafka or some other use case then, well, meetings.

Distributed Turtles all the Way Down

- Yet another familiar idea is turtles all the way down. These days we hear from users that they’ve chosen Kubernetes as the grounding of the world (ok maybe a little virtualization under that). Below that they want metal or cloud volumes (bedrock). But if you rely solely upon an external distributed storage system, then you are actually running on another distributed system that has its own challenges and quirks and was designed in a time when granite tablets were used for storage (or at least when low IO disk drives predominated). These systems were designed for persistent workloads that, as you know, persisted and certainly didn’t move around again and again and again — hence the notorious attach / detach problems experienced by many such systems. By comparison, when you use OpenEBS you leverage Kubernetes itself as a layer of storage management.

Freedom from Lock-in: Freedom to Innovate

- Kubernetes offers users a level of freedom from lock-in that is less about saving money and more about being able to choose the right solution for the task at hand. Yet when it comes to stateful workloads, if you simply connect via some APIs to an external system, you are relying on the system on the other end of the API to behave the same way whether it is invoked on premises or on cloud A, B or C. And, spoiler alert, it won’t ever behave the same. By comparison, OpenEBS ensures you have not just the same API but the same behaviors anywhere you run. And to forestall next level lock-in via proprietary APIs that are outside the scope of CSI, we are helping by ramping up the KubeMove initiative to address use cases such as MOVE and RECOVER that are otherwise only available from ourselves and other single vendor approaches.

I hope that one thing we’ve shown with OpenEBS and other open source work at MayaData, such as Litmus for chaos engineering, is that while our Twitter and, of course, mascot and sticker game is strong, so is our code! It’s with that same invigorating spirit that I write this post.

In less than 3 minutes, you too can be running OpenEBS on whatever Kubernetes you have lying around. There are no special settings required; no kernel modules that could violate your RHEL support should you be thinking ahead, although OpenEBS does primarily use iSCSI at the moment. So, enough thought leadership blog stuff — take the code out for a run today.

$ helm install stable/openebs --name openebs --namespace openebs
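Once the install finishes, a quick sanity check might look like the sketch below. It assumes kubectl is pointed at the same cluster and that the release landed in the openebs namespace used in the command above; the storage class names you see will depend on your OpenEBS version.

```shell
#!/bin/sh
# Assumption: the chart was installed into the "openebs" namespace,
# matching the helm command above.
NAMESPACE="openebs"

if command -v kubectl >/dev/null 2>&1; then
  # The OpenEBS control-plane pods should eventually report Running.
  kubectl get pods --namespace "$NAMESPACE"
  # Storage classes available in the cluster; names vary by OpenEBS version.
  kubectl get storageclass
else
  # Skip quietly on machines without kubectl rather than failing.
  echo "kubectl not found on PATH; skipping checks" >&2
fi
```

If the pods are still starting, running the same two commands again after a minute or so is usually enough.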

And thanks again everyone for validating OpenEBS over the last few years. Together we’re showing the world that storage for Kubernetes doesn’t just solve a problem, it’s an opportunity to do the right stuff faster and with more agility.

If you are interested in what others have said recently about OpenEBS and MayaData, please take a look at the Twitter feed. Some interesting articles include:

- https://thenewstack.io/kubemove-move-data-across-kubernetes-clusters-without-proprietary-extensions/

- https://siliconangle.com/2019/05/24/kubecon-kubernetes-ecosystem-goes-cloud-native/

You can also check out the press release announcing new investments, joining CNCF and especially OpenEBS 0.9:

- https://www.prnewswire.com/news-releases/openebs-accepted-into-cncf-and-openebs-0-9-released-300853066.html

This article was first published on May 26, 2019 on MayaData's Medium Account.
