One of the standard requirements of any useful Chaos Engineering tool is the ability to stop an experiment dead in its tracks. The big red button. It is often needed to avoid the repercussions of a hypothesis gone wrong, to limit the blast radius, or simply for debugging (especially when constructing new experiments). This is particularly important for SREs conducting gamedays or injecting faults in big shared clusters (pre-prod, staging, prod anyone?). In 1.1.0, Litmus lays the foundation for immediate termination of in-flight experiments via a simple patch command to the ChaosEngine resource. This, along with the standard fare of new experiments, schema enhancements, RBAC optimizations & docs improvements, makes up the 1.1.0 release. Let’s take a look at these changes in detail.

Cut the Chaos, will you?

One of the features (and therefore one of the challenges) of Litmus is that a chaos-experiment run is carried out by multiple disaggregated components, each serving a unique purpose. These are the chaos-runner, which manages the lifecycle of the “experiments” listed in the chaos engine, and the experiment job, which executes the pre- & post-chaos health checks and the experiment business logic. In some cases, there are also helper deployments (such as PowerfulSeal or Pumba). The entity that ties these together is, of course, the chaos engine, which is therefore expected to carry the state controlled by the developer/user. Aborting an experiment means immediately removing all the “chaos resources” associated with it. Another requirement is to ensure that the “user-facing” resource is not removed until all its children have been successfully removed.

To satisfy these requirements, the chaos operator now supports a .spec.engineState flag in the chaos engine CR (“active” by default). This flag can be patched to “stop” to abort the experiment. The reconcile operation then deletes all the child resources (with a grace period of 0s). It identifies them by a “chaos lineage”: the UUID of the chaos engine, which is passed as a label to all the experiment resources. A finalizer set on the chaos engine upon creation is removed once all these resources are terminated. The same workflow is executed upon a standard delete of the chaos engine. This satisfies most abort requirements. However, improvements are still needed for cases where chaos is injected by triggering processes within the target containers of the application under test (AUT). These are being worked on for subsequent releases!
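
To make the abort flow above more concrete, here is a toy, in-memory model of it in Python. It is a sketch under stated assumptions, not the chaos operator's actual code:

- The `chaosUID` label key and the finalizer name are placeholders chosen for illustration.
- `FakeCluster` stands in for the API server.
- `merge_patch` reimplements the RFC 7386 JSON merge-patch semantics that `kubectl patch --type merge` applies.

```python
LINEAGE_LABEL = "chaosUID"            # assumed label key carrying the engine UUID
FINALIZER = "chaosengine.finalizer"   # hypothetical finalizer name


def merge_patch(target, patch):
    """Apply an RFC 7386 JSON merge patch (what `kubectl patch --type merge` sends)."""
    if not isinstance(patch, dict):
        return patch                      # scalars and lists replace the target wholesale
    result = dict(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)         # null deletes the key
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


class FakeCluster:
    """In-memory stand-in for the API server: a flat list of labelled objects."""

    def __init__(self):
        self.objects = []

    def create(self, kind, name, labels):
        self.objects.append({"kind": kind, "name": name, "labels": dict(labels)})

    def children(self, uid):
        return [o for o in self.objects if o["labels"].get(LINEAGE_LABEL) == uid]

    def delete_children(self, uid, grace_period_seconds=0):
        # The model ignores grace periods and removes matching objects at once,
        # which is what a 0s grace period approximates on a real cluster.
        self.objects = [o for o in self.objects if o["labels"].get(LINEAGE_LABEL) != uid]


def reconcile(engine, cluster):
    meta, spec = engine["metadata"], engine["spec"]
    uid = meta["uid"]
    stopping = (spec.get("engineState", "active") == "stop"
                or meta.get("deletionTimestamp") is not None)
    if stopping:
        cluster.delete_children(uid, grace_period_seconds=0)
        # Drop the finalizer only once every child is gone, so the
        # user-facing engine is always the last thing to be removed.
        if not cluster.children(uid) and FINALIZER in meta["finalizers"]:
            meta["finalizers"].remove(FINALIZER)
        return
    if FINALIZER not in meta["finalizers"]:
        meta["finalizers"].append(FINALIZER)
    if not cluster.children(uid):
        # (Re-)trigger: create a runner pod and an experiment job tagged with the lineage label.
        for kind, suffix in (("Pod", "runner"), ("Job", "experiment")):
            cluster.create(kind, f"{meta['name']}-{suffix}", {LINEAGE_LABEL: uid})
```

In this model, patching `{"spec": {"engineState": "stop"}}` and reconciling removes only the labelled children and then the finalizer. Patching back to "active" re-creates them. Setting `metadata.deletionTimestamp` takes the same cleanup path, mirroring the delete workflow described above.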

```shell
kubectl patch chaosengine <chaosengine-name> -n <namespace> --type merge --patch '{"spec":{"engineState":"stop"}}'
```

The .spec.engineState flag also takes the value “active”, upon which the experiment is re-triggered. This is especially helpful when the user would like to retain the custom engine schema constructed on the test clusters.

```shell
kubectl patch chaosengine <chaosengine-name> -n <namespace> --type merge --patch '{"spec":{"engineState":"active"}}'
```

OpenEBS validation experiments

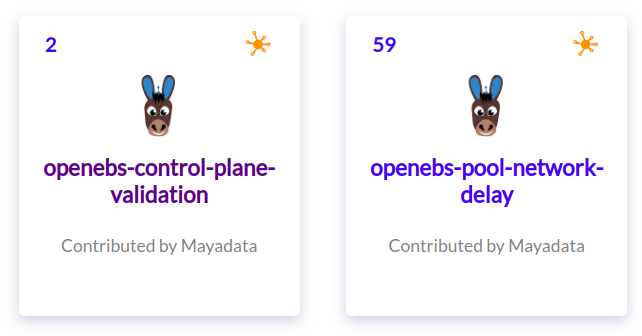

The OpenEBS chart has been expanded with new experiments that aid deployment validation throughout the user’s journey: installing the OpenEBS control plane, creating storage pools & then provisioning applications on cStor persistent volumes. Currently, the experiments test the resilience of the various components via pod failures & custom health checks. In upcoming releases, these experiments will be upgraded with more component-specific validations as the OpenEBS project publishes them.

In the event of Chaos

Events are an important observability aid in any software, and even more so in chaos engineering. In 1.1.0, Litmus introduces support for creating Kubernetes events around chaos experiments. Currently, events are generated for both successful and failed creation or termination of a chaos experiment. In subsequent releases, these will be enhanced to generate events around the actual chaos injection steps. Also being worked on are the standardization of these events & support for pushing them to other monitoring frameworks.

```shell
kubectl describe chaosengine nginx-chaos -n nginx
```

```text
Events:
  Type    Reason                             Age               From            Message
  ----    ------                             ----              ----            -------
  Normal  Started reconcile for chaosEngine  61s               chaos-operator  Creating all Chaos resources
  Normal  Stopped reconcile for chaosEngine  2s (x2 over 75s)  chaos-operator  Deleting all Chaos resources
```

Talking of observability, we have also improved the logging in the chaos-runner via the klog framework.
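
The `(x2 over 75s)` in the Age column above reflects event aggregation. Repeated identical events are folded into a single entry that carries a count plus first-seen and last-seen times. The toy recorder below illustrates that idea. It is a simplified sketch, not the Kubernetes event correlator or the chaos operator's recorder. The following are all simplifying assumptions:

- the dedup key (type, reason, message),
- the integer simulated clock,
- the age formatting (exact seconds below two minutes, whole minutes beyond).

```python
from dataclasses import dataclass


@dataclass
class Event:
    type: str
    reason: str
    message: str
    source: str
    first_seen: int   # seconds on a simulated clock
    last_seen: int
    count: int = 1


class EventRecorder:
    """Toy recorder that folds repeats of an identical event into one counted entry."""

    def __init__(self, source):
        self.source = source
        self.events = {}

    def record(self, type_, reason, message, now):
        key = (type_, reason, message)
        event = self.events.get(key)
        if event is None:
            self.events[key] = Event(type_, reason, message, self.source, now, now)
        else:
            event.count += 1
            event.last_seen = now


def human_age(seconds):
    # Simplified: exact seconds under two minutes, whole minutes beyond that.
    return f"{seconds}s" if seconds < 120 else f"{seconds // 60}m"


def describe_rows(recorder, now):
    """Rows in (Type, Reason, Age, From, Message) order, ordered by last-seen time."""
    rows = []
    for e in sorted(recorder.events.values(), key=lambda ev: ev.last_seen):
        age = human_age(now - e.last_seen)
        if e.count > 1:
            age += f" (x{e.count} over {human_age(now - e.first_seen)})"
        rows.append((e.type, e.reason, age, e.source, e.message))
    return rows
```

The replay uses a simulated clock with "now" at 75s. It records a stop at 0s, an activation at 14s and a second stop at 73s. This reproduces the two rows in the describe output above: `61s` for the start, and `2s (x2 over 75s)` for the stops.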

Why annotate when I know multiple apps will be killed?

Litmus recommends annotating target applications to keep the blast radius low and to ensure that impact is isolated during automated background chaos runs, especially when multiple applications share the same label. However, this premise doesn’t hold for standard infra-chaos operations such as node drain or node disk loss. These inherently have a higher blast radius and impact multiple apps in the cluster, so annotation checks make little sense for them. In yet other situations, users are confident that the labels they use are unique and do not want to tamper with the deployment specs (e.g., under stringent GitOps models with sacrosanct artifacts). Here too, the feedback was to not make annotations a prerequisite for chaos. This has been addressed with the .spec.annotationCheck flag (“true” by default). When it is set to “false”, Litmus skips the annotation check before proceeding with chaos on the specified app or infra component. For infra experiments, this is typically used in conjunction with .spec.auxiliaryAppInfo, which lists the applications whose health is to be verified.

```yaml
…
spec:
  annotationCheck: 'false'
  engineState: 'active'
  auxiliaryAppInfo: ''
  appinfo:
    appns: 'default'
    applabel: 'app=nginx'
    appkind: 'deployment'
  chaosServiceAccount: node-cpu-hog-sa
  monitoring: false
…
```
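
The sketch below shows how the .spec.annotationCheck flag changes target selection. It is an illustration only, with these assumptions:

- The annotation key is `litmuschaos.io/chaos` with the value `"true"` (this post does not name the key).
- Only a single `key=value` label is supported, like the `applabel` above.
- Apps are modelled as plain dictionaries rather than queried from the cluster.

```python
ANNOTATION_KEY = "litmuschaos.io/chaos"   # assumed annotation key, not named in this post


def is_true(flag):
    # The engine spec quotes the flag ('true' / 'false'), so parse it as a string.
    return str(flag).strip().lower() == "true"


def matches_label(labels, applabel):
    """Match a single equality selector such as 'app=nginx'."""
    key, _, value = applabel.partition("=")
    return labels.get(key) == value


def select_targets(apps, applabel, annotation_check="true"):
    candidates = [a for a in apps if matches_label(a.get("labels", {}), applabel)]
    if is_true(annotation_check):
        # Default behaviour: only apps explicitly annotated for chaos are eligible.
        candidates = [a for a in candidates
                      if a.get("annotations", {}).get(ANNOTATION_KEY) == "true"]
    return [a["name"] for a in candidates]
```

With `annotation_check="false"`, every app matching `app=nginx` becomes a target. This is the behaviour the infra experiments and GitOps-managed deployments described above rely on.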

Can I bring my own volumes to the chaos experiment?

In previous releases of Litmus, we added support for defining configmap & secret volumes containing config information in the chaosexperiment CRs. However, these are defined at the namespace level, which is still effectively “global”. There might be different chaosengines/instances in the same namespace that need different mount paths or a varying number of volumes. With 1.1.0, we have introduced the ability to specify (or override) these volumes from the chaosengine, using

.spec.experiments.<exp>.spec.components.<configmap/secret>.

```yaml
…
experiments:
  - name: openebs-pool-container-failure
    spec:
      components:
        env:
          - name: APP_PVC
            value: demo-percona-claim
        configMaps:
          - name: data-persistence-config
            mountPath: /mnt
…
```
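
The post doesn't specify how engine-level entries combine with those defined in the ChaosExperiment CR. The sketch below assumes a simple rule, which is not documented behavior: an engine entry replaces the experiment entry with the same name, and entries with new names are appended. Under that rule, the merge could be sketched as:

```python
def merge_volumes(experiment_volumes, engine_volumes):
    """Assumed precedence: an engine entry replaces the experiment entry with the
    same name (keeping its position); entries with new names are appended."""
    merged = {v["name"]: dict(v) for v in experiment_volumes}
    for v in engine_volumes:
        merged[v["name"]] = dict(v)       # dicts keep insertion order (Python 3.7+)
    return list(merged.values())
```

Under this rule, the `data-persistence-config` entry from the engine above would be mounted at /mnt even if the experiment CR declared a different path for it.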

Where is my chaos treasure?

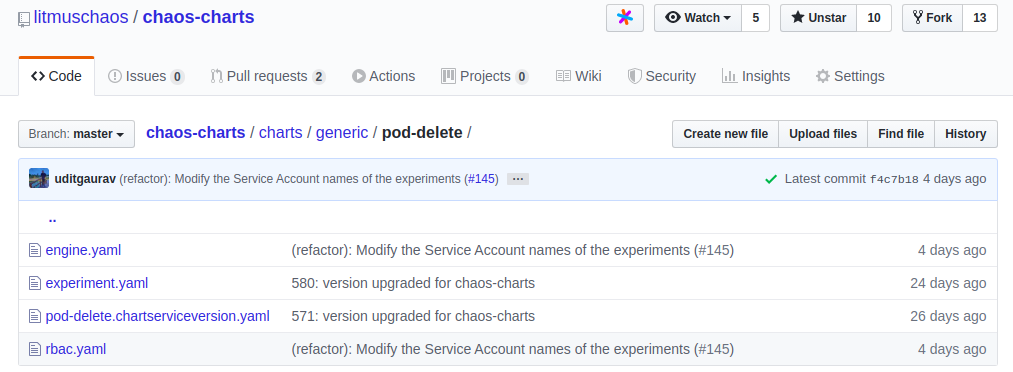

Increasingly frequent feedback from Litmus contributors concerned the duplication of Kubernetes manifests (chaos experiments, sample chaosengines, experiment RBAC YAMLs). These were spread across the various repositories in the LitmusChaos GitHub organization (litmus, docs, charts, litmus-e2e, community), so every schema change, bug fix, image update, etc. required multiple pull requests. While the manifests served as a quick aid to users, maintaining them was slowly eating into productivity. This was addressed in 1.1 with the nifty embedmd tool. All the chaos resources associated with a chaos experiment (the experiment CR, RBAC and engine CR) are now maintained in the respective experiment folders in the chaos-charts repo. This repo is emerging as the single source of truth for experiment manifests, and all other sources embed these YAMLs.
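
For readers unfamiliar with embedmd, a Markdown file carries a directive line such as `[embedmd]:# (path/to/file.yaml yaml)`. The tool rewrites the code block that follows that line with the current contents of the file. The Python below is a simplified re-implementation of that idea for illustration, not embedmd itself (which is a Go tool). Its limitations:

- It handles only a local path and an optional language, ignoring embedmd's URL and start/end regexp options.
- `read_file` is an injected callback, so the sketch runs without touching disk.

```python
import re

FENCE = "`" * 3
# Simplified directive: [embedmd]:# (path [language])
DIRECTIVE = re.compile(r"^\[embedmd\]:# \((\S+)(?: (\w+))?\)\s*$")


def embed(markdown, read_file):
    """Rewrite the code block after each directive with the file's current contents."""
    lines = markdown.splitlines()
    out, i = [], 0
    while i < len(lines):
        line = lines[i]
        out.append(line)
        i += 1
        match = DIRECTIVE.match(line)
        if not match:
            continue
        path, lang = match.group(1), match.group(2) or ""
        # Skip a previously embedded block directly under the directive.
        if i < len(lines) and lines[i].startswith(FENCE):
            i += 1
            while i < len(lines) and not lines[i].startswith(FENCE):
                i += 1
            i += 1                                    # step past the closing fence
        out.append(FENCE + lang)
        out.extend(read_file(path).rstrip("\n").splitlines())
        out.append(FENCE)
    return "\n".join(out) + "\n"
```

Because every docs page only holds a directive, updating the YAML in the experiment folder and re-running the embedding refreshes all copies at once. This removes the need for one pull request per repository.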

Other notable improvements

Some of the other improvements that made it into 1.1 include the following:

- The chaos operator is now deployed with minimal permissions to perform CRUD operations against the primary (chaosengine) & secondary (pods, services) resources, rather than possessing an all-encompassing ClusterRole with “*/*/*” capabilities.

- Validation (e2e) pipelines with Ginkgo-based BDD tests have been added for OpenEBS experiments.

- An FAQ document with sections on general usage & troubleshooting has been added to help users with common issues.
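
To illustrate the difference between a “*/*/*” ClusterRole and a scoped one, here is a simplified RBAC rule check in Python. It only compares apiGroups, resources and verbs, ignoring resourceNames, subresources and non-resource URLs. The `MINIMAL_RULES` list is a hypothetical set shaped after the first bullet above, not the Role actually shipped with the operator.

```python
CRUD_VERBS = ["create", "get", "list", "watch", "update", "patch", "delete"]

# Equivalent of the old all-encompassing "*/*/*" grant.
WILDCARD_RULES = [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}]

# Hypothetical least-privilege rules: primary (chaosengines) and secondary
# (pods, services) resources only. Not the operator's real manifest.
MINIMAL_RULES = [
    {"apiGroups": ["litmuschaos.io"], "resources": ["chaosengines"], "verbs": CRUD_VERBS},
    {"apiGroups": [""], "resources": ["pods", "services"], "verbs": CRUD_VERBS},
]


def _matches(allowed, value):
    return "*" in allowed or value in allowed


def is_allowed(rules, api_group, resource, verb):
    """True if any rule grants `verb` on `resource` in `api_group` (core group is "")."""
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )
```

The wildcard rule lets the operator read secrets or delete deployments anywhere in the cluster, while the scoped rules confine it to the resources it actually reconciles.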

Conclusion

1.1 was a release in which we learnt quite a bit about user expectations and developer concerns. We acted on them via the abort implementation and manifest centralization, while also improving the chaos options for the OpenEBS community. In subsequent releases, too, we will continue to focus on the critical ingredient for open source projects: “listening” to the community for feedback and making the appropriate changes. Some of the planned items you should see next include:

- Observability improvements (events & log framework)

- Introduction of a proxy-based HTTP/network chaos experiment

- Greater flexibility in the operator/runner to integrate with another chaos framework, along with better documentation around it.

- Improved OpenEBS chaos experiments

As always, a big shoutout to our awesome contributors & users: David Gildeh (@dgildeh), Aman Gupta (@w3aman), Sumit Nagal (@sumitnagal), Laura Henning (@laumiH) for all their feedback & efforts!
