At KubeCon 2017 in Austin, I got my first deep look at Weave Scope and couldn't help but fall in love with it. The visualization Scope provides of Kubernetes resources is simply amazing. It greatly simplifies an administrator's work by cutting through the clutter of Kubernetes components: you can move directly to the component of interest and start observing and managing it.

After being tasked with simplifying storage management for Kubernetes, my immediate thought was, “Why can’t we use Scope for Kubernetes storage?” Of course, storage in Kubernetes is still under active development and new features keep arriving, but adoption of the Kubernetes persistent volume (PV) concept was already very large. We therefore thought it warranted extending Scope to include PVs.

We quickly got to work and, with the help of Alexis and the Weave team, we started coding!

We established multiple milestones for this journey:

- First, get the persistent volumes (PVs), persistent volume claims (PVCs) and Storage Classes (SCs) into Scope.

- Second, add snapshot/clone support and start monitoring the volume metrics.

- Third, bring in disks (HDDs, SSDs or similar) as a fundamental resource that the Administrator manages and monitors, just as they do CPU and Memory.

Persistent Volumes (PVs)

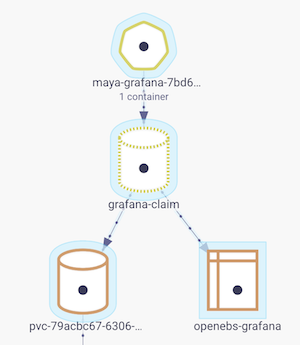

Most of the time, Persistent Volume Claims (PVCs) are the entry point for adding storage. In a reasonably loaded Kubernetes cluster, the number of PVCs will be about the same as the number of pods, or slightly lower. The administrator benefits from seeing which POD is using which PVCs, along with the associated storage classes and PVs. This is especially true if they are using the storage capacity of the Kubernetes clusters themselves. Adding this visibility is precisely what we did to start.

PVC-PV-SC-POD Relationship on Scope

You can see this new visibility in Scope by using the newly created filter “Show storage/Hide storage” under the PODs section. This filter puts the storage components in perspective with the remaining pods and their associated network data connections. Users can choose Hide storage when they are not interested in storage or want to reduce clutter.
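The POD, PVC, PV and Storage Class relationship described above can be sketched in a few lines of Python. This is only an illustration, not the code that was merged into Scope: the function name `storage_edges` and the `kind/namespace/name` node IDs are hypothetical, and the inputs are assumed to be plain dictionaries shaped like the objects returned by the Kubernetes API (`spec.volumes[].persistentVolumeClaim.claimName`, `spec.volumeName`, `spec.storageClassName`).

```python
def storage_edges(pods, pvcs, pvs):
    """Return (source, target) edges linking pod -> PVC -> PV -> StorageClass.

    Hypothetical helper: inputs are dicts shaped like Kubernetes API objects.
    """
    # PVCs are namespaced; PVs are cluster-scoped.
    pvc_by_key = {
        (c["metadata"]["namespace"], c["metadata"]["name"]): c for c in pvcs
    }
    pv_by_name = {v["metadata"]["name"]: v for v in pvs}

    edges = []
    for pod in pods:
        ns = pod["metadata"]["namespace"]
        pod_id = f"pod/{ns}/{pod['metadata']['name']}"
        for vol in pod["spec"].get("volumes", []):
            claim = vol.get("persistentVolumeClaim")
            if not claim:
                continue  # emptyDir, configMap, etc. are not storage nodes here
            pvc = pvc_by_key.get((ns, claim["claimName"]))
            if pvc is None:
                continue  # claim referenced but not (yet) present
            pvc_id = f"pvc/{ns}/{claim['claimName']}"
            edges.append((pod_id, pvc_id))

            pv = pv_by_name.get(pvc["spec"].get("volumeName"))
            if pv is None:
                continue  # PVC is still unbound
            pv_id = f"pv/{pv['metadata']['name']}"
            edges.append((pvc_id, pv_id))

            storage_class = pv["spec"].get("storageClassName")
            if storage_class:
                edges.append((pv_id, f"sc/{storage_class}"))

    # Several pods can share a PVC; drop duplicate edges, keep first-seen order.
    return list(dict.fromkeys(edges))


example_pods = [{
    "metadata": {"namespace": "default", "name": "db-0"},
    "spec": {"volumes": [
        {"name": "scratch", "emptyDir": {}},
        {"name": "data", "persistentVolumeClaim": {"claimName": "data-db-0"}},
    ]},
}]
example_pvcs = [{
    "metadata": {"namespace": "default", "name": "data-db-0"},
    "spec": {"volumeName": "pv-001", "storageClassName": "fast"},
}]
example_pvs = [{
    "metadata": {"name": "pv-001"},
    "spec": {"storageClassName": "fast"},
}]
example_edges = storage_edges(example_pods, example_pvcs, example_pvs)
```

Hiding storage then amounts to leaving these edges, and the nodes they introduce, out of the rendered graph.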

We received an enthusiastic welcome to the Scope community from the Weaveworks team. We also found encouragement from Alexis and plenty of technical help from Bryan at Weaveworks. The first pull request (PR) was really about adding PV, PVC and Storage Class support, and was merged into the Weave Scope master recently (https://github.com/weaveworks/scope/pull/3132).

PV-PVC-SC Integration into Scope

Future work:

Snapshots and Clones

CI/CD pipelines are among the most active areas in which DevOps teams are finding stateful applications on Kubernetes immediately applicable. Storing the state of a database at the end of each pipeline stage, and restoring it when required, is a commonly performed task. The state of a stateful application is stored by taking snapshots of its persistent volumes and is restored by creating clones of those persistent volumes. We believe that offering visibility and administrative capabilities to manage snapshots and clones in Scope is a natural next step.
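To make the snapshot-then-clone flow concrete, here is a minimal sketch that builds the two manifests as Python dictionaries. It assumes the `snapshot.storage.k8s.io/v1` VolumeSnapshot API and the PVC `dataSource` field found in current Kubernetes releases, which is not necessarily the mechanism we had in mind when this was written. The function names and every object name in the example are hypothetical.

```python
def snapshot_manifest(snapshot_name, pvc_name, snapshot_class):
    """Manifest for a VolumeSnapshot of an existing PVC (same namespace)."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": snapshot_name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def clone_pvc_manifest(clone_name, snapshot_name, storage_class, size):
    """Manifest for a new PVC whose contents are restored from a snapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": clone_name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            # The restore step: point the new claim at the snapshot.
            "dataSource": {
                "apiGroup": "snapshot.storage.k8s.io",
                "kind": "VolumeSnapshot",
                "name": snapshot_name,
            },
        },
    }


# Hypothetical pipeline stage: snapshot the database volume, then restore it.
stage_snapshot = snapshot_manifest("db-after-stage-1", "data-db-0", "example-snapclass")
stage_clone = clone_pvc_manifest("data-db-0-restore", "db-after-stage-1", "fast", "10Gi")
```

In a graph view such as Scope's, each snapshot would hang off the PVC named in its `source`, and each clone off the snapshot named in its `dataSource`.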

Disk Management and Monitoring

Hyper-converged Infrastructure (HCI) has yet to find its rhythm with Kubernetes, largely due to a lack of fully developed tools for disk management and monitoring. Kubernetes now has a well-accepted method for provisioning and managing volumes and for connecting them to disk management. HCI on Kubernetes will therefore benefit from new tools such as Node Disk Manager (NDM), to which, incidentally (humble brag), we are also contributing. With the disk being the fundamental component of storage and the main participant in the chaos engineering of storage infrastructure, it helps to have it properly visualised and monitored. In large Kubernetes clusters (100+ nodes), the disks will number in the thousands. Scope’s resource utilisation panel is a powerful tool that provides visibility of CPU and Memory utilisation at the Host, Container and Process level. Adding disk capacity and disk performance (IOPS and throughput) to this resource utilisation tool is a natural extension. The figure below shows the current view, to which we believe disk performance can be added.

Current View of the Resource Utilisation Tool on Scope
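The disk performance figures mentioned above, IOPS and throughput, are typically derived from two readings of cumulative counters. The sketch below shows that arithmetic only; the `DiskSample` type, its field names, and the `disk_rates` function are hypothetical and do not correspond to anything in Scope or NDM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskSample:
    """One reading of a disk's cumulative counters (hypothetical shape)."""
    timestamp_s: float
    reads_completed: int
    writes_completed: int
    bytes_read: int
    bytes_written: int


def disk_rates(prev, curr):
    """Average IOPS and throughput between two samples of the same disk."""
    elapsed = curr.timestamp_s - prev.timestamp_s
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    ops = (curr.reads_completed - prev.reads_completed) + (
        curr.writes_completed - prev.writes_completed
    )
    nbytes = (curr.bytes_read - prev.bytes_read) + (
        curr.bytes_written - prev.bytes_written
    )
    return {
        "iops": ops / elapsed,
        "throughput_bytes_per_s": nbytes / elapsed,
    }


# Two readings taken 10 seconds apart.
earlier = DiskSample(0.0, 100, 50, 0, 0)
later = DiskSample(10.0, 600, 550, 4_000_000, 6_000_000)
rates = disk_rates(earlier, later)
# 1000 operations and 10,000,000 bytes over 10 s:
# rates == {"iops": 100.0, "throughput_bytes_per_s": 1000000.0}
```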

Another important aspect of disk management is simply browsing from the application volume all the way to the disk where the data is stored. It is not possible to locate the actual disk of a persistent volume if the underlying storage is a cloud disk such as EBS or GPD, but if it is a Kubernetes local PV or an OpenEBS volume, the relationship between the volume data and the physical disks can be identified. This will be useful when managing hyper-converged infrastructure on Kubernetes.

(Future work) PODs/Disks and Nodes Relationship at Scope

The above screens come from a quick-and-dirty implementation on a dev branch that is still in progress. However, they provide a good, quick glimpse of how a POD’s volume is linked to the associated disks.
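For a Kubernetes local PV, the link from volume to node and path can be read straight off the PV object. The following is a rough sketch under that assumption, not the dev-branch implementation: `locate_local_pv` is a hypothetical name, and the input is assumed to be a dictionary shaped like a PersistentVolume from the Kubernetes API. It uses the `spec.local.path` field and the `kubernetes.io/hostname` node affinity term that local PVs carry. Cloud-disk PVs have no `spec.local`, so the function returns `None` for them.

```python
def locate_local_pv(pv):
    """Return (node_name, path) for a local PV, or None if it cannot be located.

    Hypothetical helper; `pv` is a dict shaped like a Kubernetes PersistentVolume.
    """
    spec = pv["spec"]
    local = spec.get("local")
    if local is None:
        return None  # e.g. a cloud disk: no node-local path to report

    terms = (
        spec.get("nodeAffinity", {})
        .get("required", {})
        .get("nodeSelectorTerms", [])
    )
    for term in terms:
        for expr in term.get("matchExpressions", []):
            if (
                expr.get("key") == "kubernetes.io/hostname"
                and expr.get("operator") == "In"
                and expr.get("values")
            ):
                return (expr["values"][0], local["path"])
    return None


local_pv = {
    "metadata": {"name": "local-pv-1"},
    "spec": {
        "local": {"path": "/mnt/disks/ssd1"},
        "nodeAffinity": {"required": {"nodeSelectorTerms": [{
            "matchExpressions": [{
                "key": "kubernetes.io/hostname",
                "operator": "In",
                "values": ["node-a"],
            }],
        }]}},
    },
}
cloud_pv = {
    "metadata": {"name": "ebs-pv-1"},
    "spec": {"awsElasticBlockStore": {"volumeID": "vol-example"}},
}
```

Note that the path is a directory or block device on the node; mapping it further to a specific physical disk would need node-level information of the kind a disk manager collects.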

The Weaveworks team recently started community meetings led by Fons, which looks like a great start to broader community involvement in the development of Scope. You can access the public meeting notes at:

https://docs.google.com/document/d/103_60TuEkfkhz_h2krrPJH8QOx-vRnPpbcCZqrddE1s/edit?usp=sharing

Summary

Weave Scope is a very useful tool for Kubernetes administrators for visualisation and basic administration. With extensions being added and a wider community forming, Scope’s adoption will certainly increase and benefit the Kubernetes ecosystem. We are looking forward to being an active contributor to this excellent visualisation tool.

Please provide any feedback here or in the next Scope community meeting. We will be there!

Thanks to Akash Srivastava and Satyam Zode.

This article was first published on Jun 28, 2018 on OpenEBS's Medium account.
