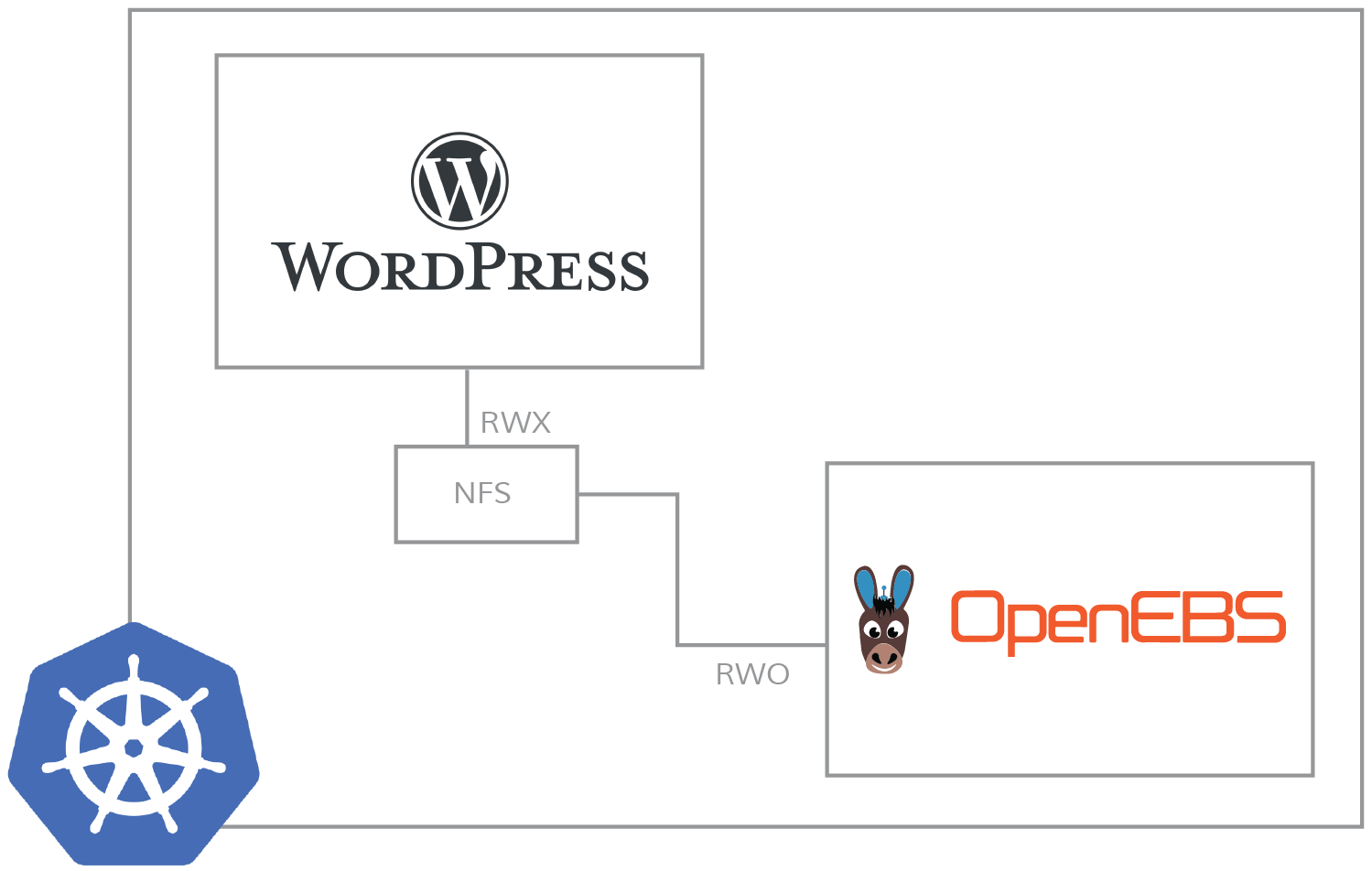

OpenEBS doesn’t support the ReadWriteMany (RWM) access mode out of the box, but it can be paired with an NFS provisioner to satisfy RWM requirements. The NFS provisioner behaves like any other application: it consumes an OpenEBS volume as its persistent volume and exports it so that many applications can share it.

In this post, I'll walk through the steps needed to run NFS on top of OpenEBS Jiva for RWM use cases. We will cover this in three sections: installing Jiva volumes, installing the NFS Provisioner, and provisioning a shared (RWM) PV for a busybox application. Detailed explanations of the NFS provisioner itself are outside the scope of this blog.

Installing Jiva Volumes

Installing and setting up OpenEBS Jiva volumes is rather easy. First, install the OpenEBS control plane components required for provisioning Jiva volumes. Then create a PVC, backed by a Jiva storageclass, to be mounted into the NFS Provisioner deployment.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: openebs-nfs
  namespace: nfs
spec:
  storageClassName: openebs-jiva-default
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: "10G"

The YAML spec shown above for PVC uses openebs-jiva-default storageclass, which is created through the control plane. For further details, please refer to this documentation.
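The PVC above, like most manifests in this post, lives in a namespace called nfs, and the post does not show that namespace being created. A minimal sketch, assuming the namespace does not already exist in your cluster, would be to apply the following before anything else:

```yaml
# Assumption: the "nfs" namespace has not been created yet.
apiVersion: v1
kind: Namespace
metadata:
  name: nfs
```

If you deploy the provisioner into a different namespace, the namespace fields in the later manifests would need to change to match.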

Installing NFS Provisioner

Installing the NFS provisioner takes three steps. For more background, refer to the NFS Provisioner docs.

- Create a Pod security policy (PSP) that grants the NFS provisioner the specific privileges it needs.

apiVersion: extensions/v1beta1
kind: PodSecurityPolicy
metadata:
  name: nfs-provisioner
  namespace: nfs
spec:
  fsGroup:
    rule: RunAsAny
  allowedCapabilities:
    - DAC_READ_SEARCH
    - SYS_RESOURCE
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
    - configMap
    - downwardAPI
    - emptyDir
    - persistentVolumeClaim
    - secret
    - hostPath

- Set up the clusterrole and clusterrole binding that give the NFS Provisioner access to the Kubernetes APIs it needs for volume provisioning.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
  namespace: nfs
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
  namespace: nfs
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io

- Reference the PVC created in the Jiva section (openebs-nfs) in the NFS provisioner deployment, so that the provisioner stores its exports on the Jiva volume.

kind: Service
apiVersion: v1
metadata:
  name: nfs-provisioner
  namespace: nfs
  labels:
    app: nfs-provisioner
spec:
  ports:
    - name: nfs
      port: 2049
    - name: mountd
      port: 20048
    - name: rpcbind
      port: 111
    - name: rpcbind-udp
      port: 111
      protocol: UDP
  selector:
    app: nfs-provisioner
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-provisioner
  namespace: nfs
spec:
  selector:
    matchLabels:
      app: nfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: quay.io/kubernetes_incubator/nfs-provisioner:latest
          ports:
            - name: nfs
              containerPort: 2049
            - name: mountd
              containerPort: 20048
            - name: rpcbind
              containerPort: 111
            - name: rpcbind-udp
              containerPort: 111
              protocol: UDP
          securityContext:
            capabilities:
              add:
                - DAC_READ_SEARCH
                - SYS_RESOURCE
          args:
            - "-provisioner=openebs.io/nfs"
          env:
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: SERVICE_NAME
              value: nfs-provisioner
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: export-volume
              mountPath: /export
      volumes:
        - name: export-volume
          persistentVolumeClaim:
            claimName: openebs-nfs

Installing the Application

Deploying an application requires a storageclass that points to the openebs.io/nfs provisioner, so that the NFS Provisioner can dynamically provision RWM volumes.

- Create a storageclass that points to the openebs.io/nfs provisioner, and a PVC that uses it to request a volume with the RWM access mode.

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: openebs-nfs
provisioner: openebs.io/nfs
mountOptions:
  - vers=4.1
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs
  annotations:
    volume.beta.kubernetes.io/storage-class: "openebs-nfs"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
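The PVC above selects its storageclass through the volume.beta.kubernetes.io/storage-class annotation. As a sketch of an alternative, the same claim can name the class in the spec.storageClassName field, which is the form the first PVC in this post (openebs-nfs) already uses:

```yaml
# Same claim as above, with the storageclass named in the spec
# instead of the beta annotation.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs
spec:
  storageClassName: openebs-nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

Use one form or the other, not both, so that it stays clear which setting selects the class.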

- Now deploy two busybox pods to read and write the data.

kind: Pod
apiVersion: v1
metadata:
  name: write-pod
spec:
  containers:
    - name: write-pod
      image: gcr.io/google_containers/busybox:1.24
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: nfs
---
kind: Pod
apiVersion: v1
metadata:
  name: read-pod
spec:
  containers:
    - name: read-pod
      image: gcr.io/google_containers/busybox:1.24
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "test -f /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: nfs

As you can see, both of these busybox pods use the same claim name, nfs, to access the volume in RWM access mode. The write-pod creates a file /mnt/SUCCESS, whereas the read-pod verifies the existence of /mnt/SUCCESS. After the successful deployment of the busybox pods, both pods move into the completed state.

Note: Although Jiva volumes can be configured for HA, the NFS Provisioner here is a single point of failure: applications may fail to be scheduled if it goes down. PVC availability therefore depends on the availability of the NFS Provisioner pod, even when the Jiva replica and controller pods are healthy.

OpenEBS Jiva volumes can be used for RWM use cases with the help of NFS Provisioner. You can deploy any stateful workload that requires RWM access mode using the approach discussed above.
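To illustrate what a longer-running RWM workload might look like, here is a rough sketch of a Deployment whose two replicas mount the same nfs claim at the same time. The Deployment name shared-writer, the busybox:1.36 image tag, and the log-writing loop are all assumptions made for illustration; they do not come from the setup above.

```yaml
# Hypothetical example: two replicas appending to files on one shared RWM volume.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shared-writer          # assumed name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: shared-writer
  template:
    metadata:
      labels:
        app: shared-writer
    spec:
      containers:
        - name: writer
          image: busybox:1.36  # assumed image tag
          command: ["/bin/sh", "-c"]
          args:
            # Each replica appends timestamps to a file named after its own pod.
            - "while true; do date >> /mnt/$HOSTNAME.log; sleep 10; done"
          volumeMounts:
            - name: shared
              mountPath: /mnt
      volumes:
        - name: shared
          persistentVolumeClaim:
            claimName: nfs     # the RWM claim created earlier
```

Each replica writes to its own file, so the sketch shows concurrent access to the shared volume without relying on any file locking between pods.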

For feedback or commentary, feel free to comment on this blog or reach out to us on our Slack channel.

That’s all folks! 😊

~ उत्कर्ष_उवाच

Ref:

- https://github.com/kubernetes-incubator/external-storage/tree/master/nfs

- https://docs.openebs.io/docs/next/jivaguide.html
