Updated September 7th, 2021: This blog has been updated with the latest guide on OpenEBS Local PV. Please refer to https://github.com/openebs/dynamic-localpv-provisioner.

How to run the ElasticSearch operator (ECK) using OpenEBS LocalPV auto-provisioned disks.

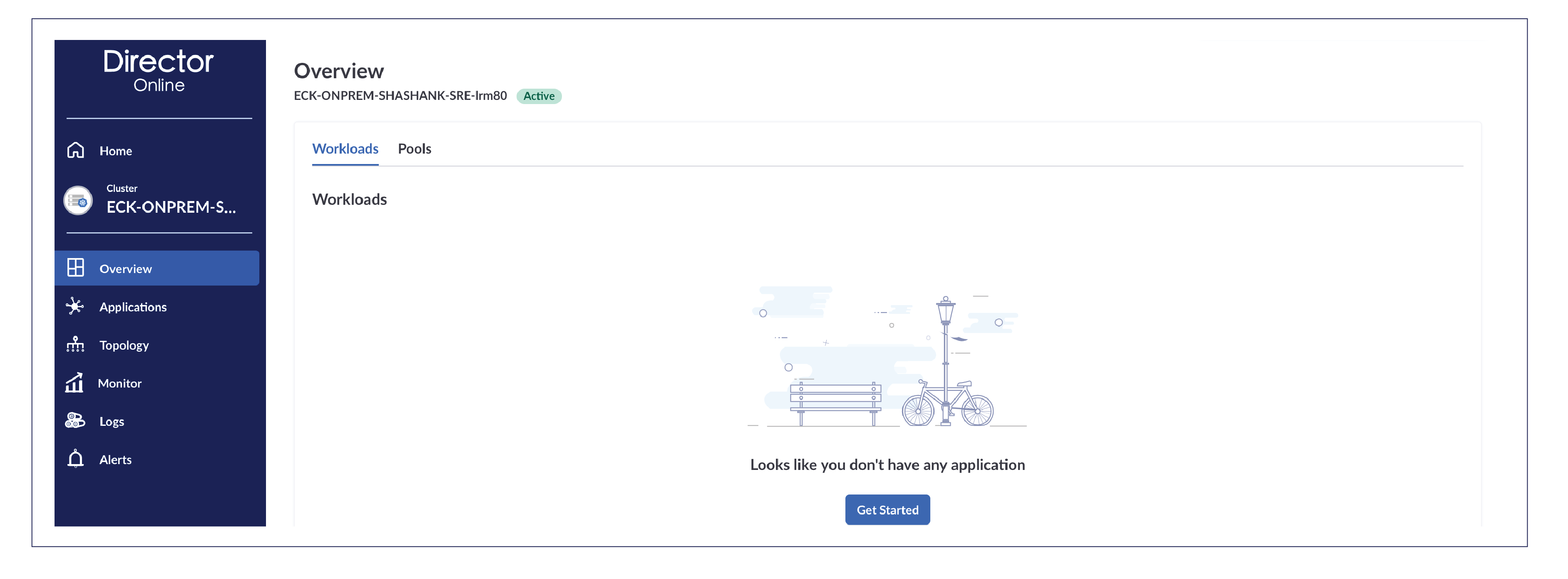

In the previous blog, we discussed the advantages of using OpenEBS LocalPV with the new operator for ElasticSearch, ECK. In this blog article, we will give step-by-step instructions to get started with deploying ElasticSearch, connecting it to MayaData DirectorOnline for free monitoring and scaling up the ElasticSearch cluster with auto-provisioning of the disks.


Prepare your cluster for monitoring

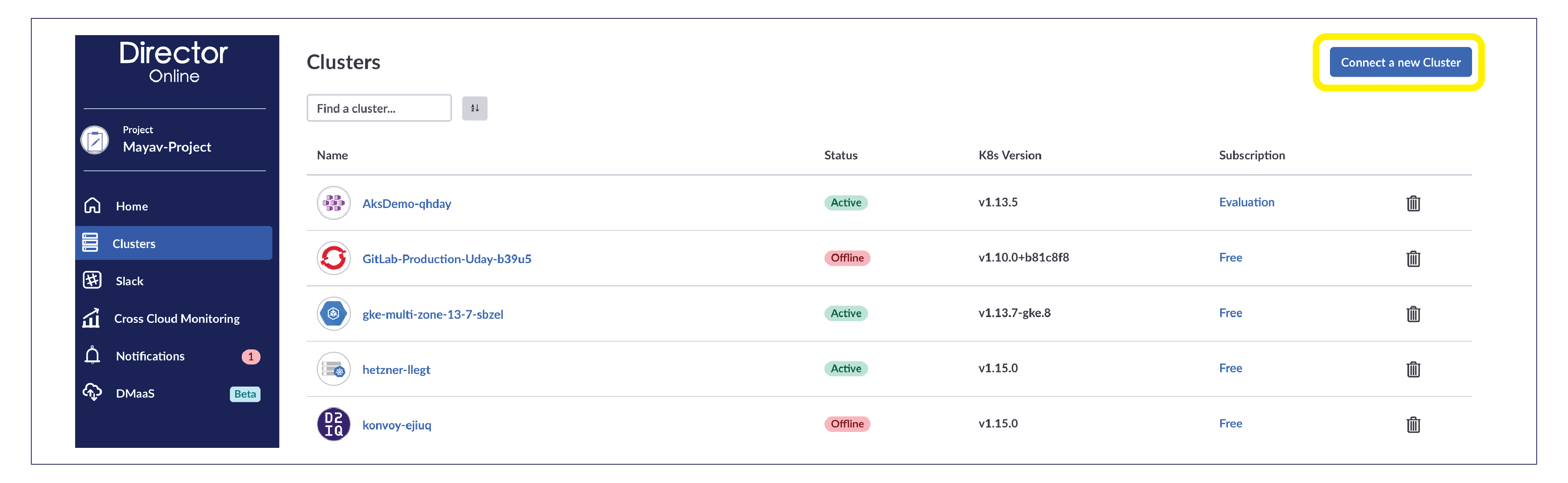

OpenEBS Director offers a free-forever tier for Kubernetes visibility (monitoring, logging, and topology views). Start by connecting your Kubernetes cluster to OpenEBS Director. Example steps are shown below.

1. Sign in using GitHub or Google or username/password

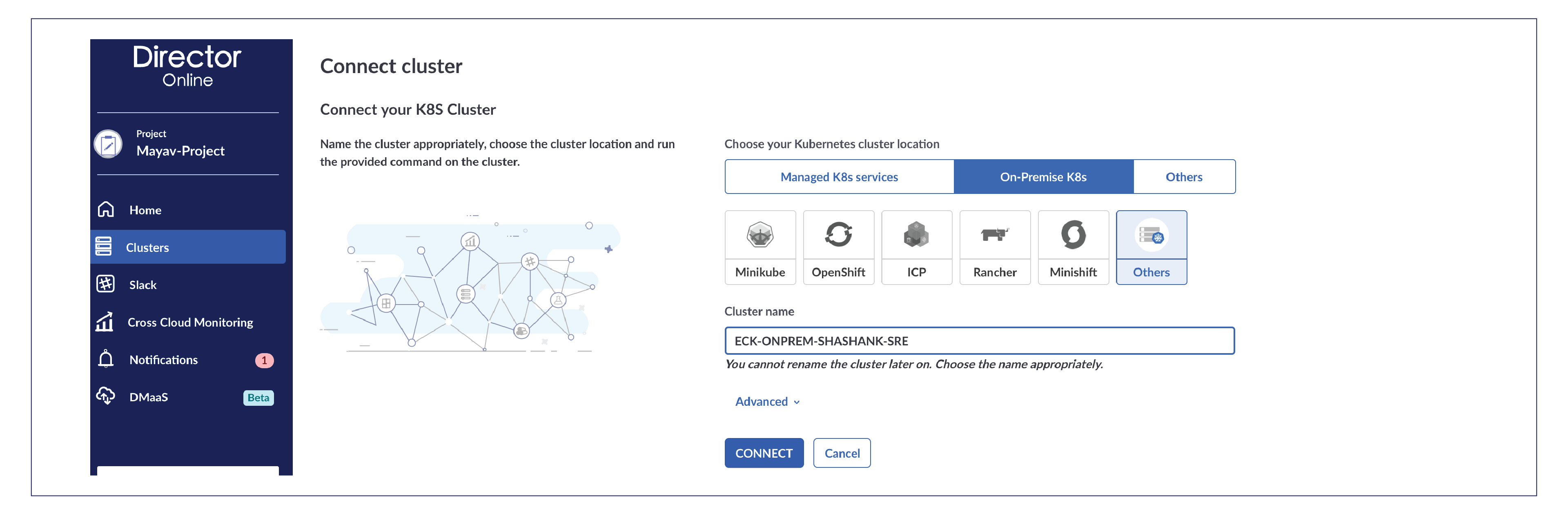

2. Choose the location of your cluster and try to connect

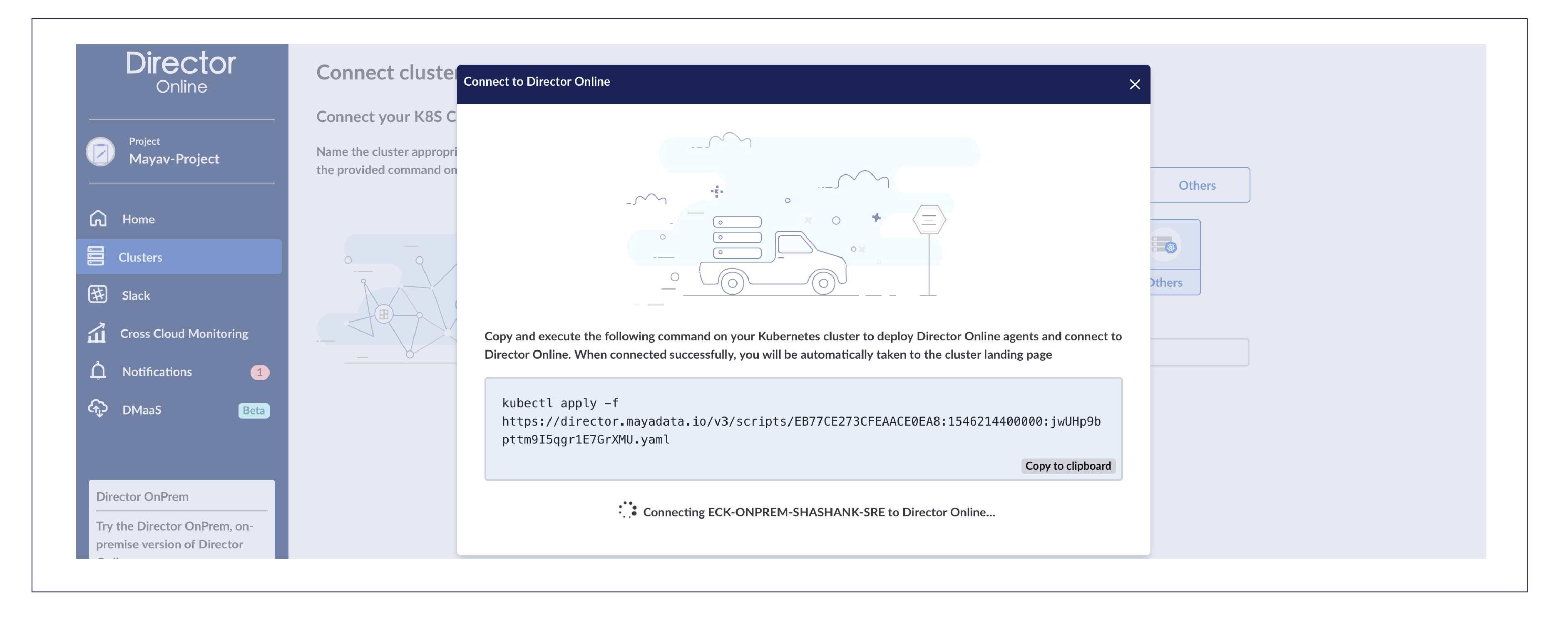

3. You will be offered a command that you need to run on your Kubernetes shell for auto-connecting

root@openebs-ci-master:~# kubectl apply -f https://director.mayadata.io/v3/scripts/EB77CE273CFEAACE0EA8:1546214400000:jwUHp9bpttm9I5qgr1E7GrXMU.yaml
namespace/maya-system created
limitrange/maya-system-limit-range created
serviceaccount/maya-io created
clusterrolebinding.rbac.authorization.k8s.io/maya-io created
secret/maya-credentials-aaca9c75 created
job.batch/cluster-register created
daemonset.extensions/maya-io-agent created
deployment.apps/status-agent created
deployment.apps/kube-state-metrics created
service/kube-state-metrics created
configmap/fluentd-forwarder created
configmap/fluentd-aggregator created
deployment.apps/fluentd-aggregator created
service/fluentd-aggregator created
daemonset.apps/fluentd-forwarder created
configmap/cortex-agent-config created
deployment.extensions/cortex-agent created
service/cortex-agent-service created
deployment.apps/weave-scope-app created
service/weave-scope-app created
daemonset.extensions/weave-scope-agent created
deployment.apps/upgrade-controller created

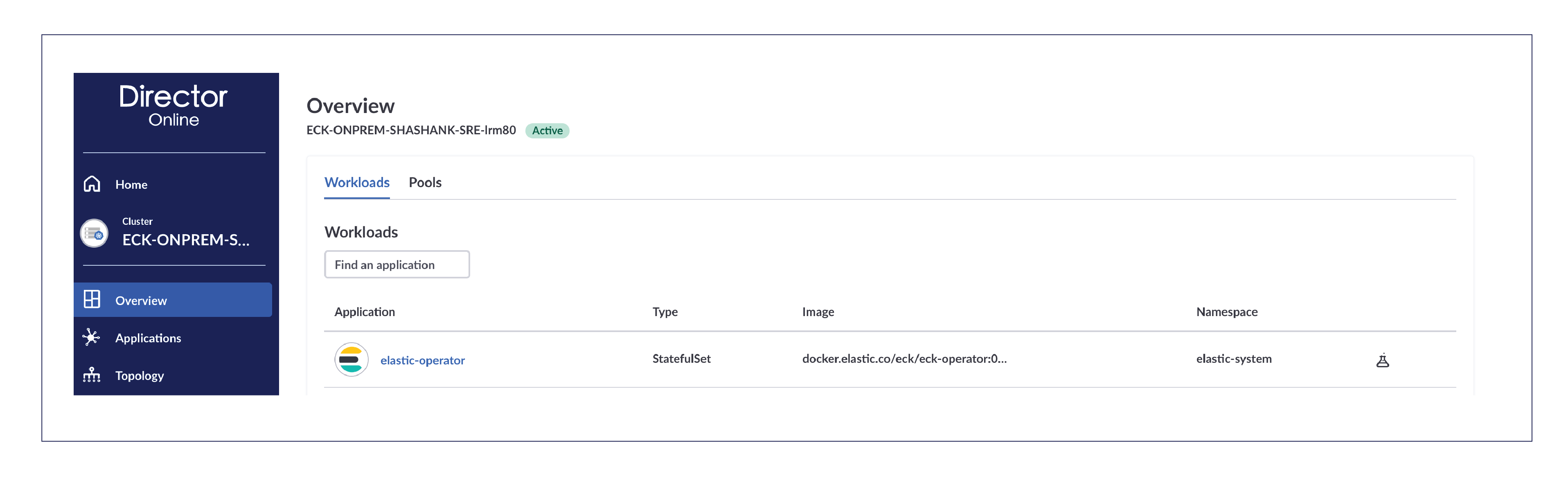

4. Once the command is executed, your cluster is connected and existing applications are displayed if any are discovered.

Install OpenEBS

Choose the default installation mode of OpenEBS. Run the following command as Kubernetes admin.

helm install --namespace openebs --name openebs stable/openebs --version 1.1.0

Disk Pre-requisites

OpenEBS LocalPV provisioning requires the disks to be available on the nodes on which the ElasticSearch data pods are going to be scheduled. If more than one disk is available, OpenEBS LocalPV provisioner chooses the appropriate disk based on the requested size. So, the pre-requisite is to have at least one free disk (not mounted) on the node.

As seen on Kubernetes shell:

root@openebs-ci-master:~# kubectl get bd -n openebs -o wide

NAME SIZE CLAIMSTATE STATUS AGE

blockdevice-3735b49b888bbf54406240a44aa7ed55 107374182400 Unclaimed Active 22h

blockdevice-b63e2e62040513698454d33818a5f630 107374182400 Unclaimed Active 22h

blockdevice-c2eb81ef31f4ca8cb1885aee675c23d0 107374182400 Unclaimed Active 22h

blockdevice-c3e24a19d11dd5ee1478c7bd8bf1ebdc 107374182400 Unclaimed Active 22h

blockdevice-e4faa44472434b2f8c3e0ef9533d3032 107374182400 Unclaimed Active 22h

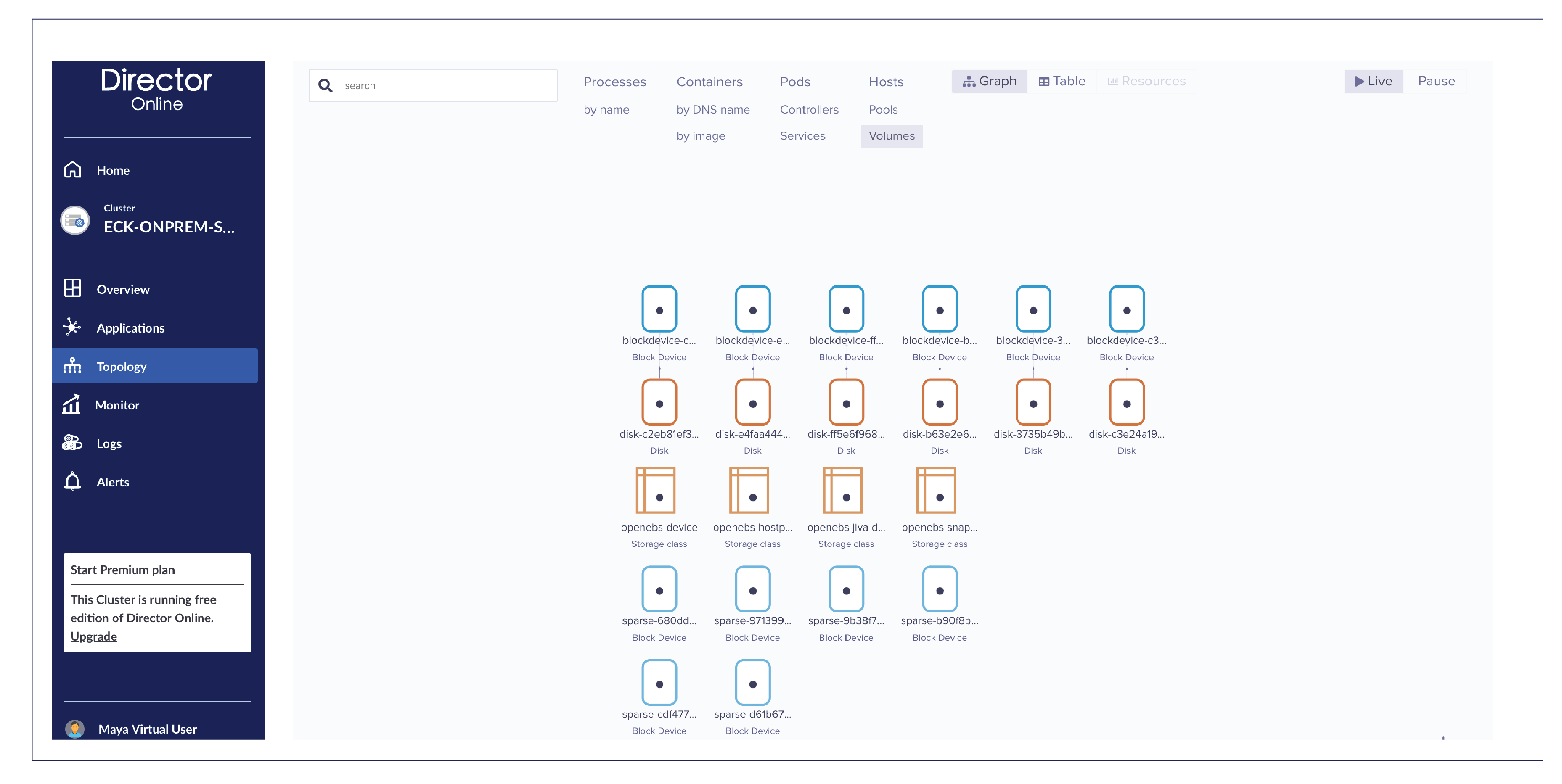

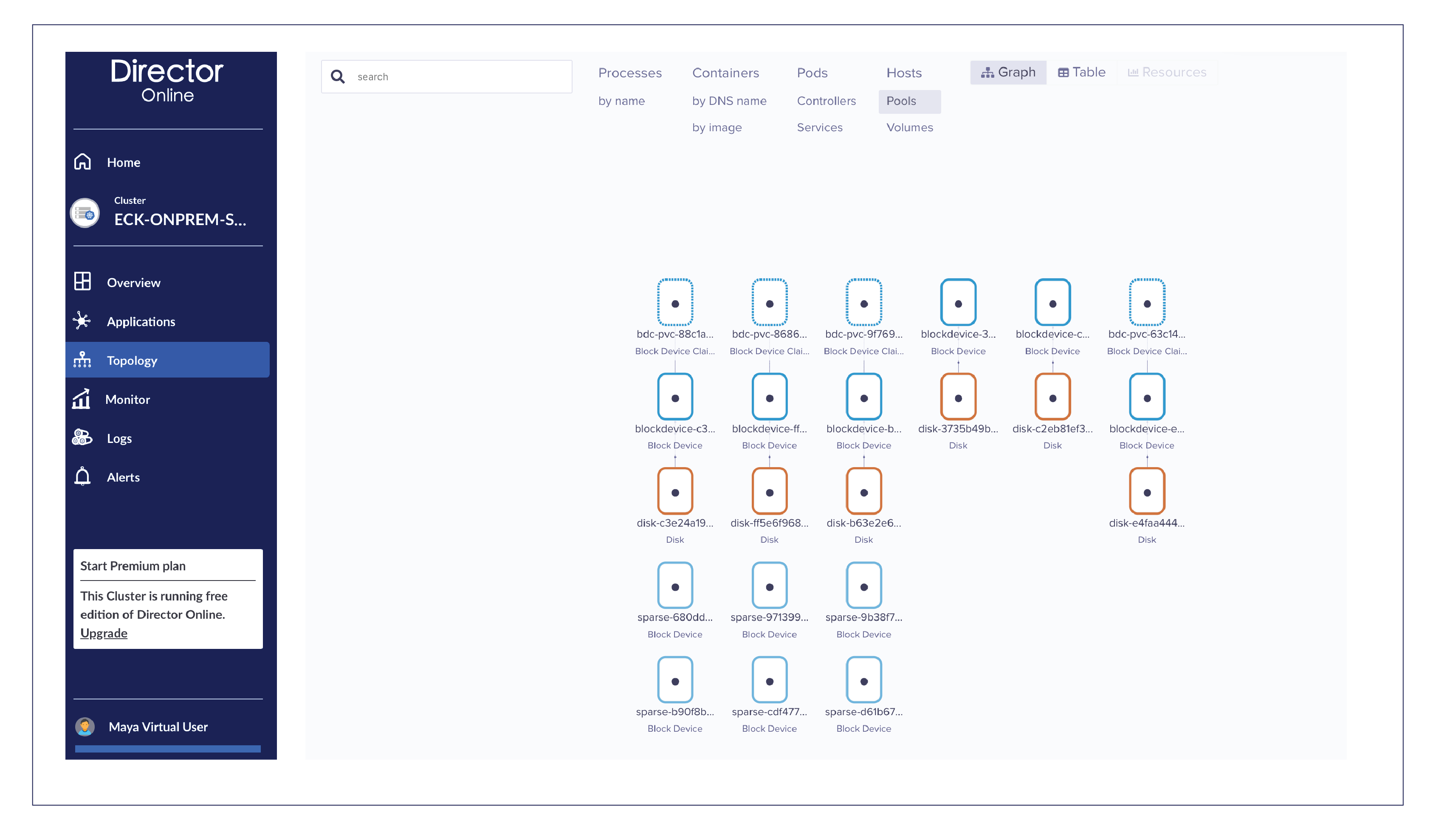

blockdevice-ff5e6f96801909b8a572bc92a5e5c11c 107374182400 Unclaimed Active 22hAs seen on OpenEBS Director (Cluster -> Topology -> Hosts)

There are six worker nodes with an unclaimed disk on each node.

Each of them is unclaimed as seen below:

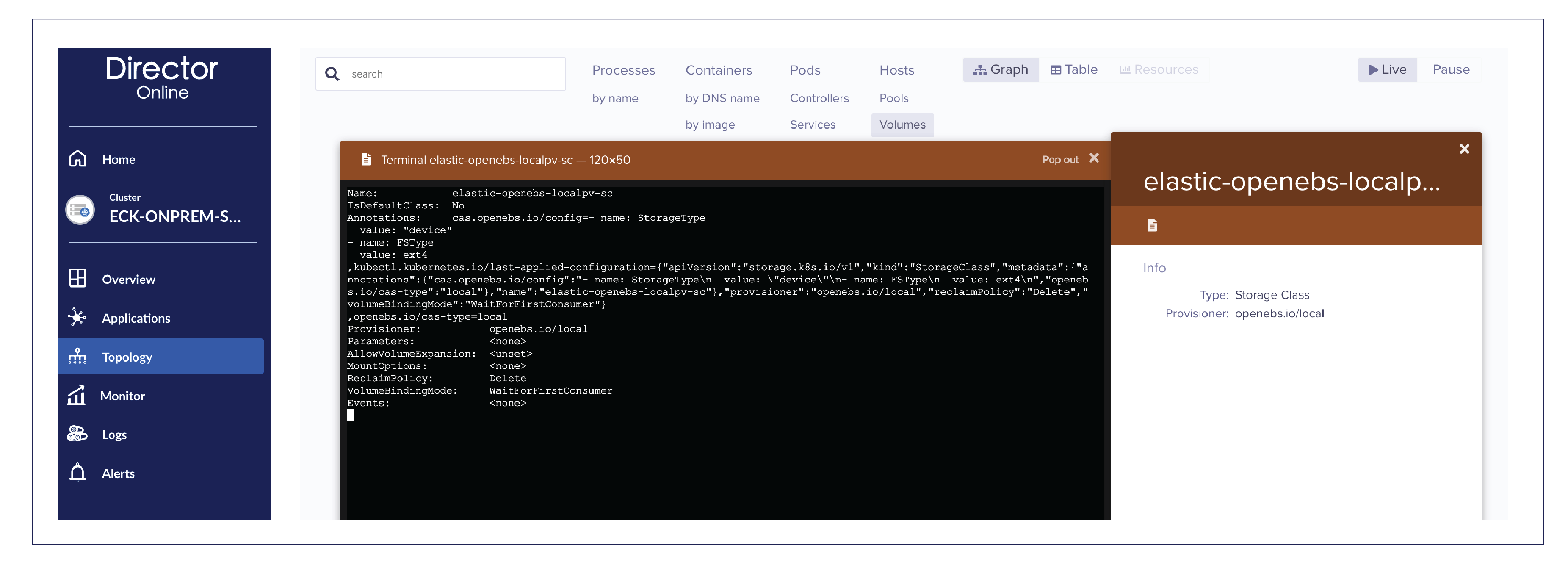
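Before creating the ElasticSearch cluster, it can help to confirm that enough unclaimed devices are large enough for the volume size you plan to request. The following is a minimal sketch, not part of OpenEBS: `gi_to_bytes` and `count_fitting_bd` are hypothetical helper names, and the parser assumes the column headers shown in the shell output above (SIZE, CLAIMSTATE, STATUS) with no empty columns.

```shell
# Hypothetical helpers (not part of OpenEBS): count block devices that could
# back a LocalPV of a given size, based on `kubectl get bd` text output.

# Convert a size in Gi (for example 90 for "90Gi") to bytes.
gi_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Read `kubectl get bd` output on stdin and print how many devices are
# Unclaimed, Active, and at least $1 bytes in size.
# Column positions are looked up from the header row.
count_fitting_bd() {
  awk -v min="$1" '
    NR == 1 {
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    $(col["CLAIMSTATE"]) == "Unclaimed" && $(col["STATUS"]) == "Active" &&
      ($(col["SIZE"]) + 0) >= (min + 0) { n++ }
    END { print n + 0 }
  '
}

# Usage (requires cluster access):
#   kubectl get bd -n openebs | count_fitting_bd "$(gi_to_bytes 90)"
```

Each device listed above is 107374182400 bytes (100Gi), so a 90Gi request (96636764160 bytes) fits on any of the six.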

Prepare OpenEBS LocalPV StorageClass to be used in your ECK operator

Create a LocalPV StorageClass on your Kubernetes cluster:

cat <<EOF | kubectl apply -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: elastic-openebs-localpv-sc
  annotations:
    openebs.io/cas-type: local
    cas.openebs.io/config: |
      - name: StorageType
        value: "device"
      - name: FSType
        value: ext4
provisioner: openebs.io/local
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
EOF

Note: You can also use OpenEBS LocalPV in hostpath mode if you are sharing the underlying disk with other applications.

You need to append or use this StorageClass elastic-openebs-localpv-sc in the ECK operator as shown later in this blog.

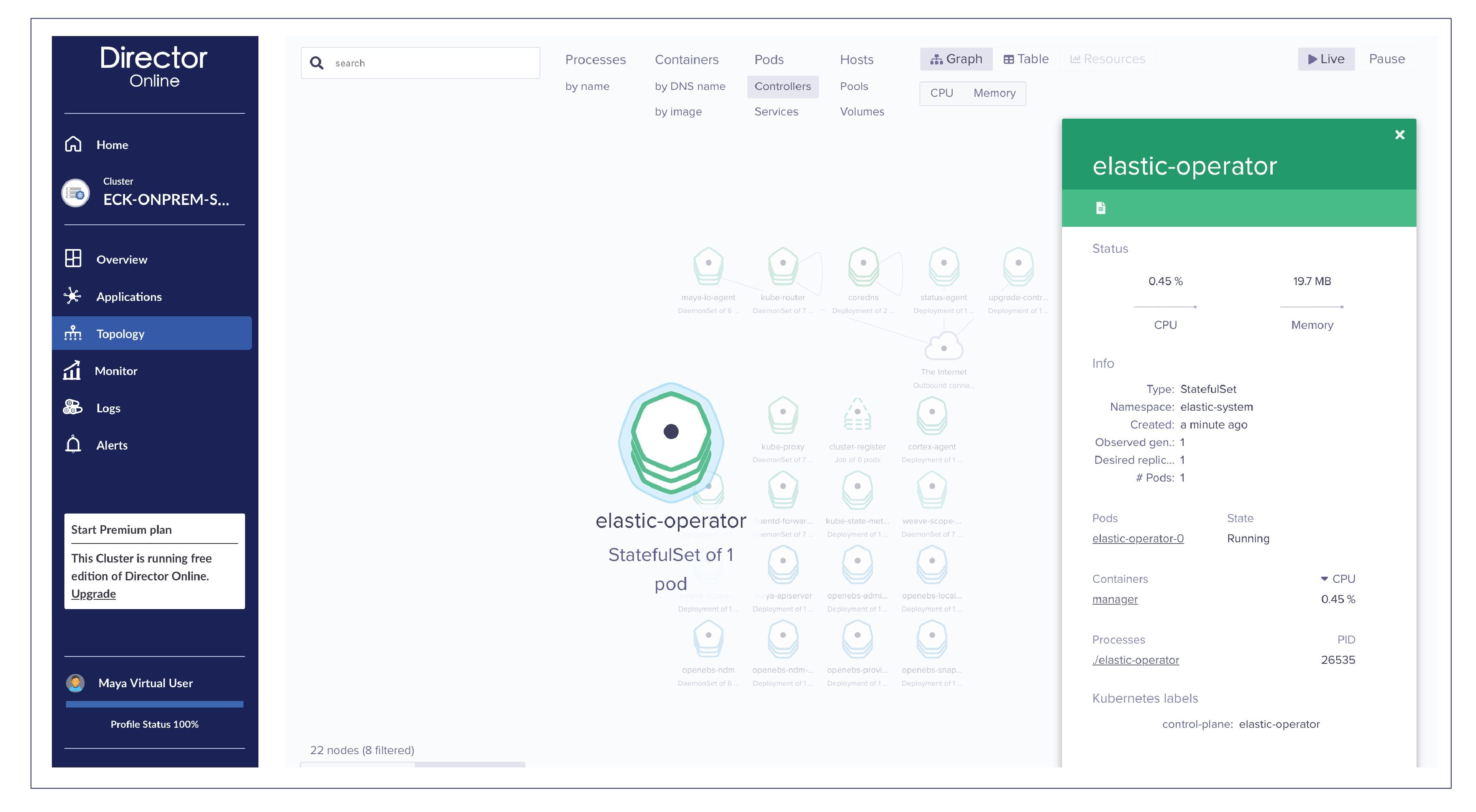
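For the hostpath mode mentioned in the note above, a StorageClass might look like the following sketch. The class name `elastic-openebs-hostpath-sc` and the helper `render_hostpath_sc` are made up for illustration, and the `BasePath` value is an assumption that should be changed to a directory on the disk you want to share.

```shell
# Sketch: print a LocalPV StorageClass manifest that uses hostpath mode.
# The name and BasePath are illustrative assumptions; adjust them for your nodes.
render_hostpath_sc() {
  cat <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: elastic-openebs-hostpath-sc
  annotations:
    openebs.io/cas-type: local
    cas.openebs.io/config: |
      - name: StorageType
        value: "hostpath"
      - name: BasePath
        value: "/var/openebs/local"
provisioner: openebs.io/local
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
EOF
}

# Usage (requires cluster access):
#   render_hostpath_sc | kubectl apply -f -
```

Printing the manifest from a function keeps it reviewable before it is applied. If you use this class, reference its name in `storageClassName` instead of `elastic-openebs-localpv-sc`.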

Get Started with the ECK Operator

First, install the CRDs and the operator itself.

(https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-quickstart.html#k8s-deploy-elasticsearch)

kubectl apply -f https://download.elastic.co/downloads/eck/0.9.0/all-in-one.yaml

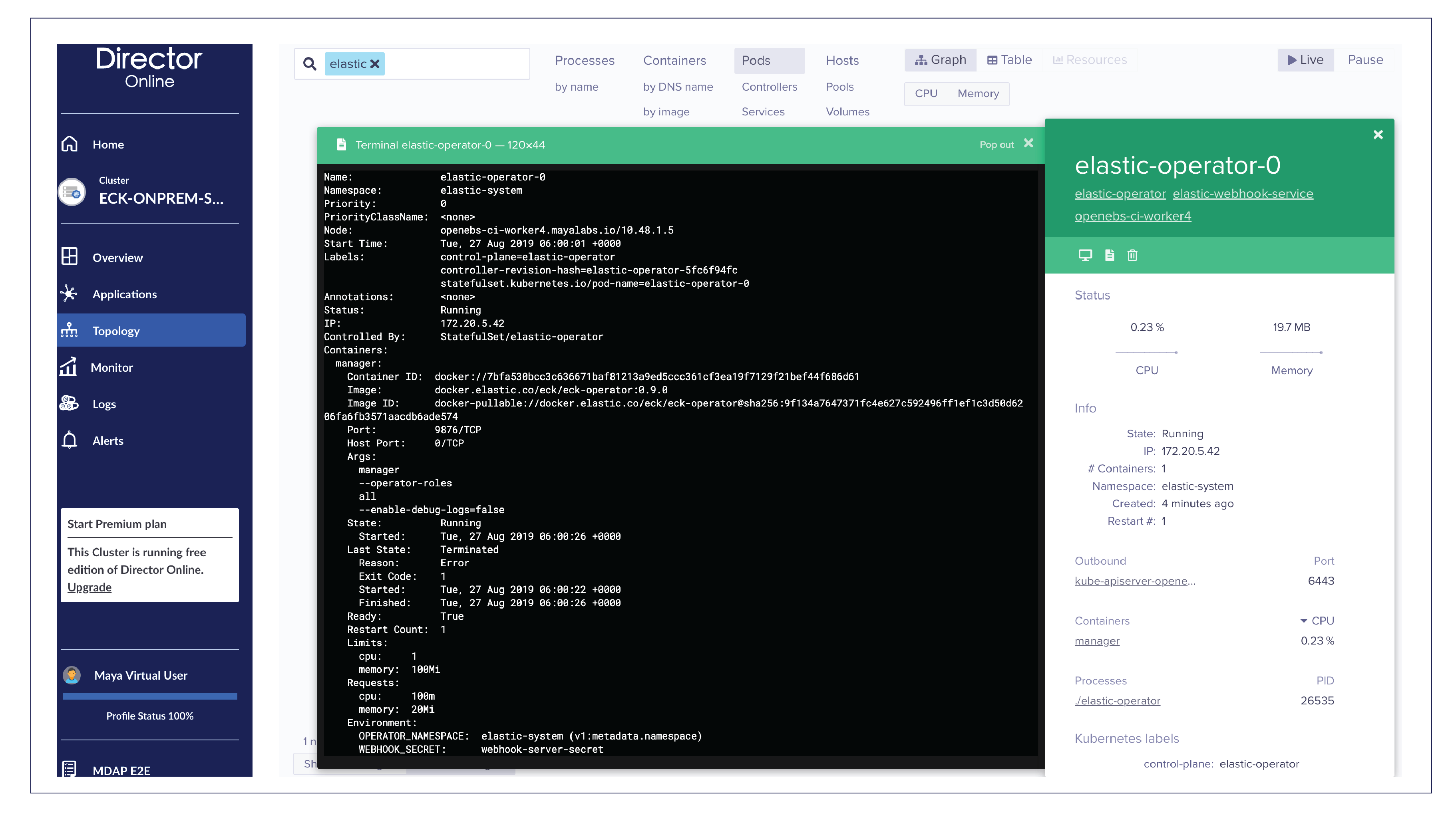

Verify that the elastic-operator is running.
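One way to script this check is sketched here. It assumes the all-in-one manifest installs the operator into the `elastic-system` namespace, as the ECK quickstart describes, and that the operator pod name starts with `elastic-operator`. `operator_running` is a hypothetical helper name, not an ECK command.

```shell
# Sketch: decide from `kubectl get pods` text whether the operator pod is up.
# Assumes the pod name starts with "elastic-operator" and the default columns
# NAME READY STATUS RESTARTS AGE.
operator_running() {
  awk '
    $1 ~ /^elastic-operator/ {
      split($2, ready, "/")                 # READY column looks like "1/1"
      if ($3 == "Running" && ready[1] == ready[2]) ok = 1
    }
    END { exit (ok ? 0 : 1) }
  '
}

# Usage (requires cluster access):
#   kubectl get pods -n elastic-system | operator_running && echo "operator is running"
```

The function returns a non-zero exit status when no matching pod is both Running and fully ready, so it can gate the next step in a script.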

Start ElasticSearch using ECK with OpenEBS LocalPV storage class

cat <<EOF | kubectl apply -f -
apiVersion: elasticsearch.k8s.elastic.co/v1alpha1
kind: Elasticsearch
metadata:
  name: openebs-ci-elastic
spec:
  version: 7.2.0
  nodes:
  - nodeCount: 3
    config:
      node.master: true
      node.data: true
      node.ingest: true
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data # note: elasticsearch-data must be the name of the Elasticsearch volume
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 90Gi
        storageClassName: elastic-openebs-localpv-sc
EOF

Note: The storage-specific configuration consists only of the storage size and the StorageClass name:

storage: 90Gi
storageClassName: elastic-openebs-localpv-sc

Verifying ElasticSearch

kubectl get elastic
NAME                                                              HEALTH   NODES   VERSION   PHASE         AGE
elasticsearch.elasticsearch.k8s.elastic.co/openebs-ci-elastic     green    3       7.2.0     Operational   106s

We set nodeCount to 3, so on three of the Kubernetes worker nodes the OpenEBS LocalPV provisioner has mounted a matching disk for each ElasticSearch data node.
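If you want to check the health and node count from a script rather than by eye, the `kubectl get elastic` output can be parsed as in this sketch. `es_status` is a hypothetical helper name, and the parser assumes the column headers shown above with no empty columns (the HEALTH column can be empty while a cluster is still starting).

```shell
# Sketch: read `kubectl get elastic` output on stdin and print
# "<health> <nodes>" for the cluster named in $1.
es_status() {
  awk -v name="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) col[$i] = i; next }
    {
      n = $1
      sub(/^.*\//, "", n)      # drop the "elasticsearch.../" resource prefix
      if (n == name) print $(col["HEALTH"]), $(col["NODES"])
    }
  '
}

# Usage (requires cluster access):
#   kubectl get elastic | es_status openebs-ci-elastic
```

For the output shown above, this prints `green 3`.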

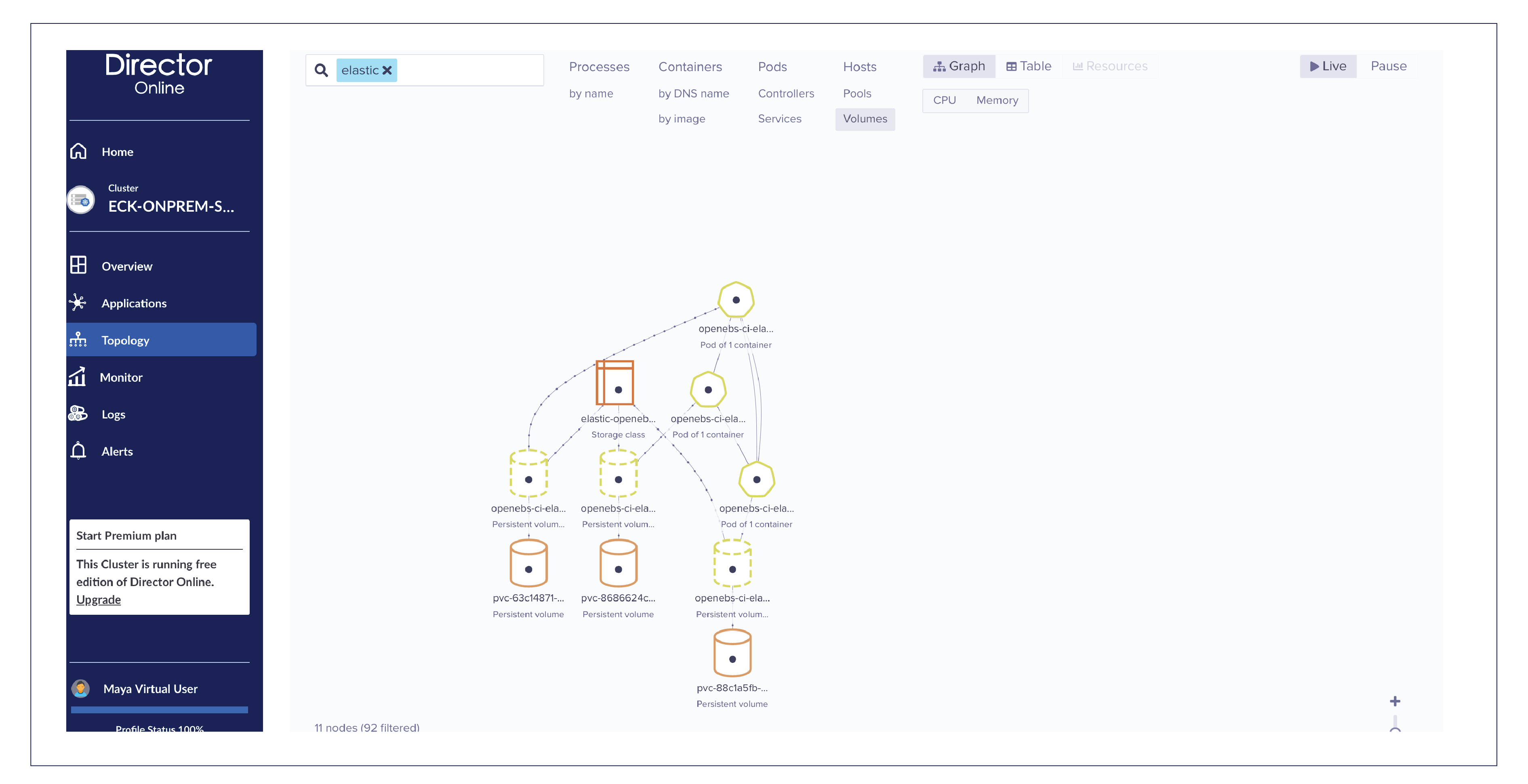

Application+PVC view

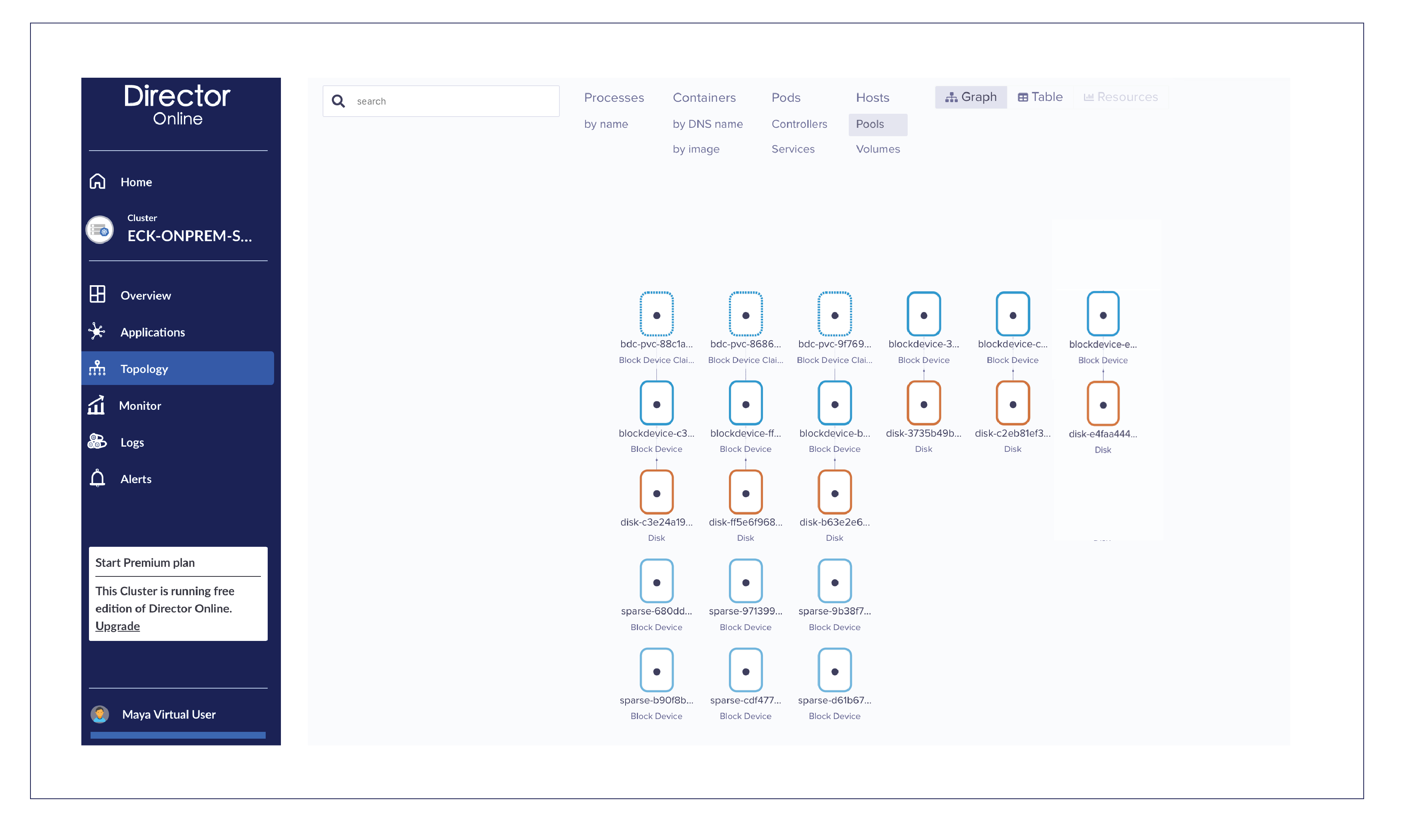

Disk / Block Device (BD) / Block Device Claim (BDC) view

The three disks are automatically claimed and attached to PVC by the OpenEBS LocalPV provisioner.

Scaling up ElasticSearch

Scaling up is done by changing nodeCount in the Elasticsearch CR spec:

root@openebs-ci-master:~# kubectl edit elasticsearch openebs-ci-elastic
- -
nodes:
- config:
    node.data: true
    node.ingest: true
    node.master: true
  nodeCount: 3
  podTemplate:
    metadata:
      creationTimestamp: null
    spec:
      containers: null
  volumeClaimTemplates:
  - metadata:
      creationTimestamp: null
      name: elasticsearch-data

Change nodeCount to 4 and save the file.

The Elastic operator then scales the cluster to 4 nodes.
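As a non-interactive alternative to `kubectl edit`, the same change can be expressed as a JSON Patch. This is a sketch rather than the method used in this walkthrough: `nodecount_patch` is a hypothetical helper, and the path assumes the data nodes are the first entry under `spec.nodes`, as in the v1alpha1 manifest used here.

```shell
# Sketch: build a JSON Patch that sets nodeCount on the first entry of
# spec.nodes. Rejects anything that is not a non-negative integer.
nodecount_patch() {
  case "$1" in
    ''|*[!0-9]*) echo "nodeCount must be a non-negative integer" >&2; return 1 ;;
  esac
  printf '[{"op":"replace","path":"/spec/nodes/0/nodeCount","value":%s}]\n' "$1"
}

# Usage (requires cluster access):
#   kubectl patch elasticsearch openebs-ci-elastic --type json -p "$(nodecount_patch 4)"
```

A patch is easier to keep in version control or a pipeline than an interactive edit, and it touches only the one field.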

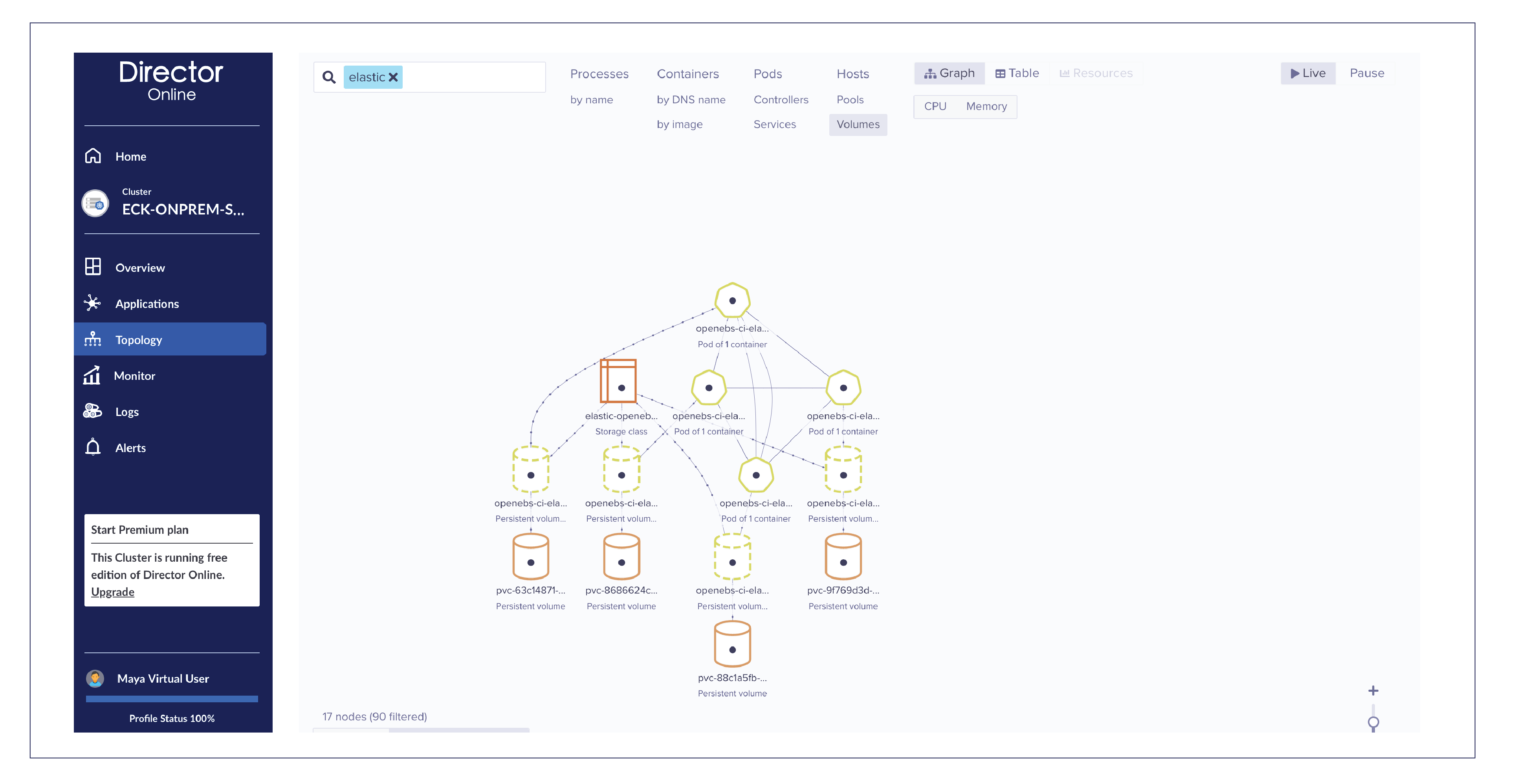

Verifying scaled up setup

Verify that the ElasticSearch cluster has a fourth node and that a LocalPV has been auto-provisioned for it:

root@openebs-ci-master:~# kubectl get elastic
NAME                                                              HEALTH   NODES   VERSION   PHASE         AGE
elasticsearch.elasticsearch.k8s.elastic.co/openebs-ci-elastic     green    4       7.2.0     Operational   13m

Application view

PVC/BD/BDC view

Summary:

We have demonstrated the following:

- The new Elastic operator is the simplest way to manage ElasticSearch clusters.

- With the OpenEBS LocalPV StorageClass, it is simple to auto-provision disks and scale the nodes, with OpenEBS handling the provisioning logic behind the scenes.

OpenEBS also provides the following additional services for LocalPV and ElasticSearch:

- Monitoring of disk health and usage parameters from the Director console

- Monitoring of ElasticSearch performance and status from the Director console

Getting Help:

Getting help for managing OpenEBS and ElasticSearch monitoring is easy. Sign up for free at OpenEBS Director and/or join our amazing Slack community.

Authors:

1. Shashank Ranjan

SRE, Data Operations

MayaData Inc

Twitter handle: @shashankranjan9

2. Uma Mukkara

COO

MayaData Inc

Twitter handle: @uma_mukkara
