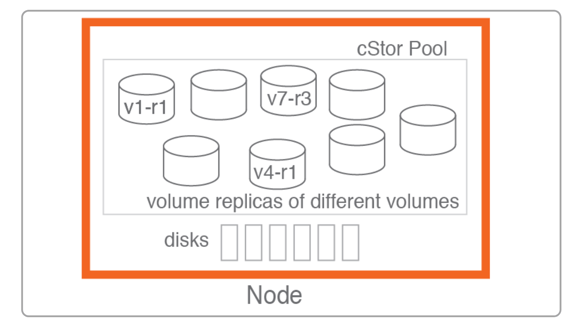

Overview: cStor Pool Provisioning

In OpenEBS, a cStor pool is local to a node. It aggregates a set of disks attached to that node. A pool holds replicas of different volumes, with at most one replica of any given volume. At run time, the OpenEBS scheduler decides which pool receives a replica according to the configured policy. A pool can be expanded dynamically without affecting the volumes residing in it. One advantage of this capability is thin provisioning: at provisioning time, a cStor volume can be much larger than the capacity actually available in the pool.

Pools matter to Kubernetes administrators when designing and planning storage classes, which are the primary interface through which applications consume persistent storage.

Benefits of a cStor pool

- Aggregation of disks to increase the available capacity and/or performance on demand.

- Thin provisioning of capacity. Volumes can be allocated more capacity than what is available in the node.

- High availability of storage when a disk is lost, provided the pool is configured in mirror mode.

cStor mirror pool: Single Node Pool Provisioning

Applying the following YAML provisions a single-node mirror cStor pool.

Note:

- Do not forget to modify the following CSPC YAML to add your hostname label of the k8s node.

  List the node to see the labels and modify accordingly.

        kubectl get node --show-labels

        kubernetes.io/hostname: "your-node"

- Do not forget to modify the following CSPC YAML to add your block device (block device should belong to the node where you want to provision).

  List the block devices to add the correct block device to the CSPC YAML.

        - blockDevices:
            - blockDeviceName: "your-block-device-1"
            - blockDeviceName: "your-block-device-2"

Note: You can add 2^n block devices in a raid group for mirror configuration.

The YAML looks like the following:

    apiVersion: cstor.openebs.io/v1
    kind: CStorPoolCluster
    metadata:
      name: cspc-mirror-single
      namespace: openebs
    spec:
      pools:
        - nodeSelector:
            kubernetes.io/hostname: "worker-1"
          dataRaidGroups:
            - blockDevices:
                - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
          poolConfig:
            dataRaidGroupType: "mirror"
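The note above about 2^n block devices per mirror raid group can be checked before the YAML is applied. The following is a minimal sketch, not part of OpenEBS: the function name `is_valid_mirror_count` is hypothetical, and it assumes that "2^n" means 2, 4, 8, and so on (a single device is rejected because it cannot be mirrored).

```shell
# Return success when COUNT is a power of two and at least 2.
# Assumption: "2^n" in the note means 2, 4, 8, ... devices per mirror raid group.
is_valid_mirror_count() {
  count=$1
  [ "$count" -ge 2 ] && [ $(( count & (count - 1) )) -eq 0 ]
}

# Try a few candidate device counts.
for n in 2 3 4; do
  if is_valid_mirror_count "$n"; then
    echo "$n block devices: ok"
  else
    echo "$n block devices: not a power of two"
  fi
done
```

The check relies on the fact that a power of two has exactly one bit set, so `count & (count - 1)` is zero only for such values.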

Steps:

- Apply the CSPC YAML (assuming the file is named cspc.yaml and contains the above content with your block device and node names).

        kubectl apply -f cspc.yaml

- Run the following commands to see the status.

        kubectl get cspc -n openebs
        kubectl get cspi -n openebs

  Note: The name of a CSPI is prefixed by the CSPC name, which indicates the CSPC it belongs to. A CSPC can have multiple CSPIs, so the prefix helps identify which CSPC a given CSPI belongs to.

- To delete the cStor pool, run this command (the name must match `metadata.name` in the YAML above):

        kubectl delete cspc -n openebs cspc-mirror-single

cStor mirror pool: 3-Node Pool Provisioning

This is similar to the single-node case above. We just need to add the proper node and block device names to the CSPC YAML.

Steps:

1. Following is a 3 node CSPC mirror YAML configuration.

        apiVersion: cstor.openebs.io/v1
        kind: CStorPoolCluster
        metadata:
          name: cspc-mirror-multi
          namespace: openebs
        spec:
          pools:
            - nodeSelector:
                kubernetes.io/hostname: "worker-1"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
              poolConfig:
                dataRaidGroupType: "mirror"
            - nodeSelector:
                kubernetes.io/hostname: "worker-2"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
              poolConfig:
                dataRaidGroupType: "mirror"
            - nodeSelector:
                kubernetes.io/hostname: "worker-3"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
              poolConfig:
                dataRaidGroupType: "mirror"

2. Apply the CSPC YAML. (Assuming that the file name is cspc.yaml that has the above content with modified bd and node name)

        kubectl apply -f cspc.yaml

3. Run the following commands to see the status.

        kubectl get cspc -n openebs
        kubectl get cspi -n openebs

   Note: The name of a CSPI is prefixed by the CSPC name, indicating which CSPC the CSPI belongs to.

4. To delete the cStor pool, run this command (the name must match `metadata.name` in the YAML above):

        kubectl delete cspc -n openebs cspc-mirror-multi
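With three pools there are three CSPIs, so the naming note above becomes useful for mapping each CSPI back to its CSPC. The following is a minimal sketch: the helper `cspc_of_cspi` and the sample CSPI name are hypothetical, and it assumes that a CSPI name is the CSPC name followed by a single `-suffix` segment that contains no dash.

```shell
# Derive the owning CSPC name by dropping the final "-suffix" segment.
# Assumption: CSPI names look like "<cspc-name>-<suffix>", suffix without dashes.
cspc_of_cspi() {
  printf '%s\n' "${1%-*}"
}

# Illustrative CSPI name, not taken from a real cluster.
cspc_of_cspi "cspc-mirror-multi-ab12"

# Possible use against a cluster (untested sketch; "-o name" prints "kind/name"):
# kubectl get cspi -n openebs -o name | while read -r r; do
#   cspc_of_cspi "${r##*/}"
# done
```

The `${1%-*}` expansion removes the shortest trailing match of `-*`, so only the last dash-separated segment is dropped and dashes inside the CSPC name are preserved.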

cStor stripe pool: Single Node Pool Provisioning

Applying the following YAML provisions a single-node stripe cStor pool.

Note:

i) Do not forget to modify the following CSPC YAML to add your hostname label of the k8s node.

List the node to see the labels and modify accordingly.

    kubectl get node --show-labels

    kubernetes.io/hostname: "your-node"

ii) Do not forget to modify the following CSPC YAML to add your block device (block device should belong to the node where you want to provision).

List the block devices to add the correct block device to the CSPC YAML.

    - blockDevices:
        - blockDeviceName: "your-block-device"

The YAML looks like the following:

    apiVersion: cstor.openebs.io/v1
    kind: CStorPoolCluster
    metadata:
      name: cspc-stripe
      namespace: openebs
    spec:
      pools:
        - nodeSelector:
            kubernetes.io/hostname: "gke-cstor-demo-default-pool-3385ab41-2hkc"
          dataRaidGroups:
            - blockDevices:
                - blockDeviceName: "blockdevice-176cda34921fdae209bdd489fe72475d"
          poolConfig:
            dataRaidGroupType: "stripe"

Steps:

- Apply the CSPC YAML (assuming the file is named cspc.yaml and contains the above content with your block device and node names).

        kubectl apply -f cspc.yaml

- Run the following commands to see the status.

        kubectl get cspc -n openebs
        kubectl get cspi -n openebs

  Note: The name of a CSPI is prefixed by the CSPC name, which indicates the CSPC it belongs to. A CSPC can have multiple CSPIs, so the prefix helps identify which CSPC a given CSPI belongs to.

- You can list as many block devices as you want in the CSPC YAML. For example, see the following YAML.

        apiVersion: cstor.openebs.io/v1
        kind: CStorPoolCluster
        metadata:
          name: cspc-stripe
          namespace: openebs
        spec:
          pools:
            - nodeSelector:
                kubernetes.io/hostname: "worker-1"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
                    - blockDeviceName: "blockdevice-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
              poolConfig:
                dataRaidGroupType: "stripe"

  The effective capacity of this pool is the sum of the capacities of the 4 block devices. If you provisioned a stripe pool with only one block device, you can add block devices later to expand the pool.

- To delete the cStor pool, run this command:

        kubectl delete cspc -n openebs cspc-stripe
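The statement that a stripe pool's effective capacity is the sum of its block device capacities can be illustrated with a small calculation. This is a sketch only: the function name `stripe_capacity_gib` is hypothetical, the sizes are made-up whole GiB values, and any overhead the pool itself consumes is ignored.

```shell
# Sum the capacities (whole GiB) of all block devices in a stripe raid group.
# Assumption: pool overhead is ignored; the sizes below are illustrative.
stripe_capacity_gib() {
  total=0
  for size in "$@"; do
    total=$(( total + size ))
  done
  echo "$total"
}

# Four 100 GiB devices, as in the 4-device example above.
stripe_capacity_gib 100 100 100 100

# A pool that started with one device and was later expanded with a second.
stripe_capacity_gib 100 250
```

The second call mirrors the expansion case: adding a block device to an existing stripe pool simply adds that device's capacity to the total.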

cStor stripe pool: 3-Node Pool Provisioning

This is similar to the single-node case above. We just need to add the proper node and block device names to the CSPC YAML.
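Because only the node and block device names change from pool to pool, the multi-node manifest can also be generated rather than written by hand. The following is a minimal sketch under stated assumptions: the function `render_stripe_cspc` and its `node=blockdevice` argument format are hypothetical conveniences, each pool gets exactly one block device, and the `openebs` namespace is taken from the examples in this post.

```shell
# Print a CSPC manifest with one single-device stripe pool per "node=blockdevice" pair.
# Usage: render_stripe_cspc <cspc-name> <node=blockdevice>...
render_stripe_cspc() {
  name=$1
  shift
  printf '%s\n' \
    'apiVersion: cstor.openebs.io/v1' \
    'kind: CStorPoolCluster' \
    'metadata:' \
    "  name: ${name}" \
    '  namespace: openebs' \
    'spec:' \
    '  pools:'
  for pair in "$@"; do
    node=${pair%%=*}   # text before the first "="
    bd=${pair#*=}      # text after the first "="
    printf '%s\n' \
      '    - nodeSelector:' \
      "        kubernetes.io/hostname: \"${node}\"" \
      '      dataRaidGroups:' \
      '        - blockDevices:' \
      "            - blockDeviceName: \"${bd}\"" \
      '      poolConfig:' \
      '        dataRaidGroupType: "stripe"'
  done
}

# Placeholder node and block device names; replace them with your own.
render_stripe_cspc cspc-stripe \
  worker-1=your-block-device-1 \
  worker-2=your-block-device-2 \
  worker-3=your-block-device-3
```

Redirecting the output to cspc.yaml gives a file that can be reviewed and then applied with `kubectl apply -f cspc.yaml`, as in the steps of this section.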

Steps:

1. Following is a 3 node CSPC stripe YAML configuration.

        apiVersion: cstor.openebs.io/v1
        kind: CStorPoolCluster
        metadata:
          name: cspc-stripe
          namespace: openebs
        spec:
          pools:
            - nodeSelector:
                kubernetes.io/hostname: "worker-1"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-d1cd029f5ba4ada0db75adc8f6c88653"
              poolConfig:
                dataRaidGroupType: "stripe"
            - nodeSelector:
                kubernetes.io/hostname: "worker-2"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-79fe8245efd25bd7e7aabdf29eae4d71"
              poolConfig:
                dataRaidGroupType: "stripe"
            - nodeSelector:
                kubernetes.io/hostname: "worker-3"
              dataRaidGroups:
                - blockDevices:
                    - blockDeviceName: "blockdevice-50761ff2c406004e68ac2920e7215679"
              poolConfig:
                dataRaidGroupType: "stripe"

2. Apply the CSPC YAML. (Assuming that the file name is cspc.yaml that has the above content with modified block device and node name)

        kubectl apply -f cspc.yaml

3. Run the following commands to see the status.

        kubectl get cspc -n openebs
        kubectl get cspi -n openebs

   Note: The name of cspi is prefixed by the cspc name that indicates which cspc the cspi belongs to.

4. To delete the cStor pool

        kubectl delete cspc -n openebs cspc-stripe

That’s all for now! Thanks for reading. Please leave any question or feedback in the comment section below.
