This year I have attended a variety of tech events, but in terms of size, organization, and especially content, Google Next ’18 was my absolute favorite so far.

You know that you are at the right event when you see a familiar face like Mr. Hightower!

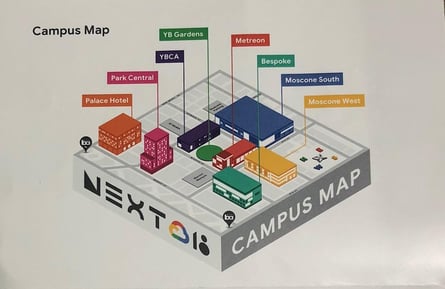

Next ’18 was an excellent representation of Google as a company and the culture they promote. Sessions were primarily held in Moscone West, but the whole event was spread across Moscone West, the brand new South building, and six other buildings.

The setup was fun and casual, and the catering was of “Google Quality.” Security was taken very seriously, with metal detectors, police, K9 search dogs, and cameras throughout. Of course, there were games, fun, and even “Chrome Enterprise Grab n Go” in case you needed a loaner laptop to work on.

What I Learned at the Next ’18 Conference

First of all, a big shout out to all involved in the Istio project. It is not a surprise that we saw great advocate marketing and support for the Istio 1.0 GA release on social media last week. Istio is a big part of Google’s Cloud Services Platform (CSP) puzzle.

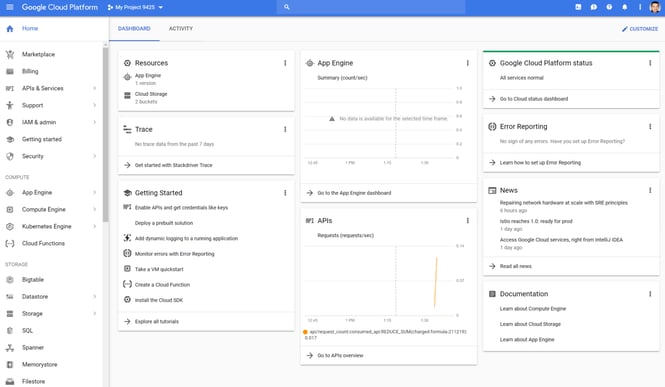

GCSP Dashboard — After deploying my first app in less than 30 seconds.

Later this year, Google is aiming to make all components of their CSP available (in some form). CSP will combine Kubernetes, GKE, GKE On-Prem, and Istio with Google’s infrastructure, security, and operations to increase velocity and reliability and to manage governance at scale.

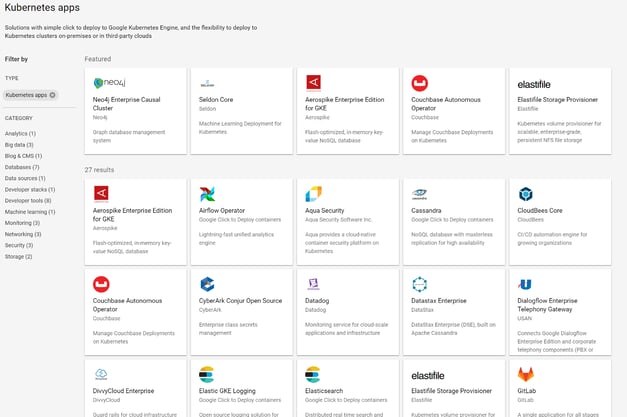

Cloud Services Platform will be available through an open ecosystem. Stackdriver Monitoring and Marketplace are the extensions to platform services. Marketplace already has 27 Kubernetes apps, including commonly used components of many environments such as Elasticsearch and Cassandra.

Users will be able to deploy a unified architecture that spans from their private cloud, using Google CSP, to Google’s public cloud. Again, the two most important pieces to this puzzle are the managed versions of the open source projects Kubernetes and Istio. Personally, the rest of it still seems to be of DIY-like quality. Knative, Cloud Build, and CD are other significant solutions that were announced at Next’18.

A New Cloud Availability Zone, this Time in your Datacenter (Which Could be in your Garage)

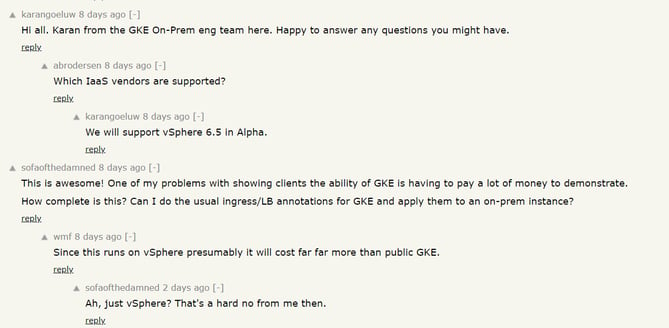

At first, GKE on-prem had me very interested. But after talking to a few Google Cloud Experts, I feel it’s still very early to be seriously considered. You can read others’ thoughts on the topic at Hacker News.

Also, GKE on-prem alpha will support vSphere 6.5 only, no bare-metal for now.

Failover from on-prem to GKE is something Google’s team is working on. This means the GKE on-prem instance will just look like another availability zone (AZ) on a Google Cloud dashboard.

Other than the vSphere dependency, the idea of being able to have an availability zone in your local data center is very compelling. It is also a very common use case for OpenEBS, since there is no cloud-vendor-provided, cloud-native way of spreading your cloud volumes (EBS, etc.) across AZs. We see many community users running web services today using OpenEBS to enable this.

GitHub and Google Partner to Provide a CI/CD Platform

Cloud Build is Google’s fully managed CI/CD platform that lets you build and test applications in the cloud. Cloud Build is fully integrated with the GitHub workflow and simplifies CI processes on top of your GitHub repositories. Below are some highlighted features.

Multiple environment support: developers can build, test, and deploy across multiple environments such as VMs, serverless, Kubernetes, or Firebase.

Native Docker support: deployment to Kubernetes or GKE can be automated by simply importing your Dockerfiles.

Generous free tier: 120 free build-minutes per day and up to 10 concurrent builds will likely be enough for many small projects.

Vulnerability identification: built-in package vulnerability scanning is performed for Ubuntu, Debian, and Alpine container images.

Build locally or in the cloud: this makes Cloud Build usable for edge deployments or alongside GKE on-prem.

Serverless — Here we are Again

Knative is a new open-source project started by engineers from Google, Pivotal, and IBM, among others. It is a K8s-based platform used to build, deploy, and manage serverless workloads.

“The biggest concern on Knative is the dependency on Istio.”

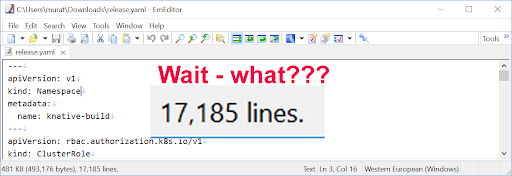

Traffic management is critical for serverless workloads. Knative is tied to Istio and therefore cannot take advantage of the broader ecosystem. This means existing external DNS services and cert-managers cannot be used. As such, Knative still needs some work and is not ready for primetime. If you don’t believe me, read the installation YAML file: the 17K-line “human readable” configuration file (release.yaml).
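If you would rather not scroll through a manifest of that size, a few lines of Python can give a rough picture of what is in it. The following is only a sketch under stated assumptions: the function name `summarize_manifest` and the tiny `SAMPLE` manifest are made up for illustration, and the counting relies on plain string matching of top-level `kind:` lines rather than a real YAML parser, so nested resources (for example, items inside a `List`) are not counted.

```python
from collections import Counter

# Hypothetical three-resource manifest, standing in for a real release.yaml.
SAMPLE = """\
apiVersion: v1
kind: Namespace
metadata:
  name: demo
---
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
---
apiVersion: v1
kind: Service
metadata:
  name: other-svc
"""


def summarize_manifest(text):
    """Return (line count, {kind: occurrences}) for a multi-document manifest."""
    lines = text.splitlines()
    # Only lines that start at column 0 with "kind:" are treated as resources;
    # indented "kind:" keys (nested fields) are deliberately ignored.
    kinds = Counter(
        line.split(":", 1)[1].strip()
        for line in lines
        if line.startswith("kind:")
    )
    return len(lines), dict(kinds)


line_count, kinds = summarize_manifest(SAMPLE)
print(line_count, kinds)
```

To try it on the real thing, read a locally downloaded copy of the file into a string and pass that to `summarize_manifest` instead of `SAMPLE`.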

My Take on all of the Above — Clash of the Cloud Vendors

If you have been in IT long enough, you can easily see the emerging patterns and predict why some technologies will become more important or why others will be replaced.

“What is happening today in the industry is the battle to become the ‘Top-level API’ vendor.”

Twenty to twenty-five years ago, hardware was still the king of IT. Brand-name servers, networks, and storage appliance vendors were ruling the datacenters. Being able to manage network routers or configure proprietary storage appliances were among the most highly-desired skills. We were talking to hardware…

Twenty years ago (in 1998), VMware was founded. VMware slowly but successfully commercialized hypervisors and virtualized IT. They became the new API to talk to, and everything else under that layer became a commodity. We were suddenly writing virtualized drivers, talking software-defined storage and networking, and the term “software-defined” was born. Because of this, traditional hardware vendors lost the market and momentum!

Twelve years ago, the AWS platform was launched. Cloud vendors became the new API that developers wanted to talk to, and now hypervisors became a commodity. CIOs and enterprises that were sucked into the cloud started worrying about cloud lock-in. They were afraid of events similar to the vendor lock-in or hypervisor lock-in experienced before. The technology might be new, but the concerns were almost the same.

Four years ago, Kubernetes was announced, and v1.0 was released in mid-2015. Finally, here was an open-source project that threatened all previous proprietary, vendor-managed “Top-level APIs,” and it became a widely adopted container orchestration technology. Although it came from Google, Kubernetes took off after it was released as open source. So far, Red Hat has profited most from Kubernetes with its Red Hat OpenShift platform. We now see somewhat of a battle over the APIs used to operate applications on Kubernetes, with the Red Hat/CoreOS Operator Framework and other projects, including one supported by Google and others such as Rook.io, emerging to challenge or extend the framework.

Now, Google Kubernetes Engine (GKE), Microsoft Azure Kubernetes Service (AKS), Amazon Elastic Container Service for Kubernetes (EKS), IBM Cloud Container Service (CCS), and Rackspace Kubernetes-as-a-Service (KaaS) are all competing in the hosted Kubernetes space (additional new vendors are expected here).

There is enough room to grow in the self-hosted Kubernetes space, with GKE on-prem being validation of this from Google.

Hardware>Virtualization>Cloud>Containers>Serverless???

Many of us see Serverless as the logical next step, but it may be too granular to support larger adoption. Current limitations validate these claims and have shown that it does not scale well for intense workloads.

One size doesn’t fit all, and there are still traditional use cases that even run on bare-metal and VMs. The same might be true for Serverless. It is not for every workload, and modernizing existing workloads will take time. However, we will see who can become the leader of the next “Top-level API.”

What do you think? Who is going to win the clash of the titans? What did you think about Next’18 and Google’s strategy?

Thanks for reading and feel free to leave any feedback!

Some Next’18 Moments

Check out these popular hashtags:

#next18 #googlenext18 #knative #kubernetes #istiomesh

Also check out the keynotes from last week’s #GoogleNext18:

http://g.co/nextonair

This article was first published on Aug 2, 2018 on OpenEBS's Medium Account

