For most open-source projects in the Kubernetes ecosystem, the period right after KubeCon can be quite overwhelming. LitmusChaos, too, was demonstrated and discussed during the conference, and believe me, it takes some time to assimilate that much feedback and then actually prioritize and act on it. While the Kubernetes community members we met readily approved of the cloud-native approach we have adopted, we also realized there was a clear need for:

- Application-specific chaos experiments with better exit checks

- Improved chaos execution capabilities: handling an evolving experiment schema, better execution performance, better observability around chaos, etc.

- Good user docs to enable faster, seamless self-adoption

In light of this, the 0.9 release charter focused on improving the generic and Kafka experiments, while laying the groundwork for further enhancements to experiment execution with a new chaos-runner and introducing user guides for each application. While we continue to polish these aspects in subsequent releases, let's take a look at what has gone into Litmus 0.9.

Improvements to the Generic Experiment Suite

While the LitmusChaos experiments for OpenEBS continue to be the most widely adopted, the last few months have seen increased use of the generic experiment suite, especially among teams introducing chaos engineering practices into their organizations' app development culture.

Here, too, application/pod-level experiments are preferred over infra/platform-specific ones because of their lower blast radius. Below are a few enhancements that make these experiments more meaningful to practitioners while also improving their interoperability (in terms of base images).

Support for Multi/Group kill of Kubernetes Pods

One interesting use case for the pod-delete experiment is during performance benchmark runs. While this experiment tests the inherent resiliency (or, shall we say, deployment sanity) of a containerized application, it is also instructive to understand how the loss of application replicas affects performance (added latency, bandwidth dips). This is especially true for stream processing applications such as Kafka. One LitmusChaos adopter has been using the Locust load generation tool to drive a multi-broker Kafka setup and visualize the impact of pod loss on performance metrics. In such a setup, it is useful to be able to kill a group of application replica pods at once. This is now possible via the ENV "KILL_COUNT", specified in the ChaosEngine spec, which kills the desired number of pods (sharing the same label in a given namespace) at the same time.

```yaml
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: nginx-chaos
  namespace: default
spec:
  appinfo:
    appns: default
    applabel: 'app=nginx'
    appkind: deployment
  chaosServiceAccount: nginx-sa
  monitoring: false
  jobCleanUpPolicy: delete
  experiments:
    - name: pod-delete
      spec:
        components:
          # set chaos duration (in sec) as desired
          - name: TOTAL_CHAOS_DURATION
            value: '30'
          # set chaos interval (in sec) as desired
          - name: CHAOS_INTERVAL
            value: '10'
          # number of pods (matching applabel) to kill at the same time
          - name: KILL_COUNT
            value: '3'
          # pod failures without '--force' & default terminationGracePeriodSeconds
          - name: FORCE
            value: "false"
```
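To illustrate what KILL_COUNT implies, here is a minimal Python sketch of the target-selection step: from the pods in a namespace, keep those matching `applabel` and pick `KILL_COUNT` distinct ones at random. The pod record shape, the function names, and the choice to fail fast when KILL_COUNT exceeds the number of matching replicas are our assumptions, not the Litmus implementation. In a live cluster, the label filtering would normally happen server-side via a label selector, and the chosen pods would then be deleted together.

```python
import random
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical pod record: {"name": str, "labels": {key: value}}
Pod = Dict[str, Any]


def parse_label(applabel: str) -> Tuple[str, str]:
    """Split an applabel such as 'app=nginx' into ('app', 'nginx')."""
    key, sep, value = applabel.partition("=")
    if not sep or not key:
        raise ValueError(f"applabel must look like key=value, got {applabel!r}")
    return key, value


def select_kill_targets(pods: List[Pod], applabel: str, kill_count: int,
                        rng: Optional[random.Random] = None) -> List[str]:
    """Pick kill_count distinct pod names among the pods carrying applabel."""
    if kill_count < 1:
        raise ValueError("KILL_COUNT must be at least 1")
    key, value = parse_label(applabel)
    candidates = [p["name"] for p in pods if p["labels"].get(key) == value]
    if kill_count > len(candidates):
        raise ValueError(
            f"KILL_COUNT={kill_count} exceeds the {len(candidates)} matching pods")
    rng = rng or random.Random()
    # sample() draws without replacement, so no pod is picked twice
    return rng.sample(candidates, kill_count)
```

For instance, with four `app=nginx` replicas and `KILL_COUNT` set to `'3'`, `select_kill_targets(pods, "app=nginx", int("3"))` returns three distinct nginx pod names. ENV values arrive as strings, hence the `int()` conversion.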

Support for Network Chaos on Kubernetes Pods Irrespective of "tc"

LitmusChaos uses the awesome Pumba integration to inject network latency into an application pod. Internally, Pumba uses the Linux traffic shaper tool tc, with the netem module, to create the necessary egress traffic rules for packet loss, latency injection, etc. However, many applications use base images that do not contain tc, which hindered Litmus interoperability with those applications. Pumba does provide a helper/supporting alpine container with the iproute2 package (which provides tc), which it runs with the NET_ADMIN capability to inject chaos into the target container's network stack. Using it was challenging, though, because of how Pumba was launched in the LitmusChaos experiment: as a daemonset in dry-run mode, with a custom command run via `kubectl exec` once the co-located Pumba pod had been identified. The experiment has now been enhanced to use the latest stable Pumba release (0.6.5), in which a Pumba job (using the iproute2 container) is launched on the node hosting the target container. With this change, any application pod can be subjected to network chaos experiments, whether or not its image includes tc.

```yaml
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: nginx-chaos
  namespace: default
spec:
  appinfo:
    appns: default
    applabel: 'app=nginx'
    appkind: deployment
  chaosServiceAccount: nginx-sa
  monitoring: false
  jobCleanUpPolicy: delete
  experiments:
    - name: container-kill
      spec:
        components:
          # specify the name of the container to be killed
          - name: TARGET_CONTAINER
            value: 'nginx'
          - name: LIB
            value: 'Pumba'
          - name: LIB_IMAGE
            value: '0.6.5'
```
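For context on what the iproute2 helper does, here is a hedged Python sketch that builds the kind of `tc`/`netem` command used to shape a container's egress traffic. Because such a command runs from a helper container attached to the target's network stack (with NET_ADMIN), the target image itself never needs `tc`. The function names and the `eth0` interface are illustrative assumptions, and Pumba's exact invocation may differ.

```python
from typing import List


def netem_command(interface: str, latency_ms: int = 0, loss_pct: float = 0.0) -> List[str]:
    """Build a 'tc qdisc replace ... netem' argv adding latency and/or packet loss."""
    if latency_ms < 0 or not 0 <= loss_pct <= 100:
        raise ValueError("latency must be >= 0 and loss must be within 0-100%")
    if latency_ms == 0 and loss_pct == 0:
        raise ValueError("nothing to inject: set latency_ms and/or loss_pct")
    # 'replace' installs the root qdisc, or overwrites one that already exists
    cmd = ["tc", "qdisc", "replace", "dev", interface, "root", "netem"]
    if latency_ms:
        cmd += ["delay", f"{latency_ms}ms"]
    if loss_pct:
        cmd += ["loss", f"{loss_pct:g}%"]
    return cmd


def netem_cleanup_command(interface: str) -> List[str]:
    """Build the argv that removes the netem root qdisc once chaos is over."""
    return ["tc", "qdisc", "del", "dev", interface, "root"]
```

For example, `netem_command("eth0", latency_ms=2000)` yields the argv for `tc qdisc replace dev eth0 root netem delay 2000ms`; the cleanup command removes the rule once the chaos duration ends.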

Support for injecting config/application info via ConfigMaps and Secrets

The ChaosExperiment schema has been enhanced to support injecting application/platform-specific information as ConfigMap and Secret volumes, to facilitate better post-checks and out-of-band access to infrastructure. While ENV variables remain the norm for individual chaos tunables, multi-value inputs and config-specific information have always been passed via ConfigMaps and Secrets. As of 0.9, you can provide the ConfigMap/Secret name and the desired mountPath in the ChaosExperiment CR, with the precondition that these are created by the user before triggering the experiment. The chaos-runner validates these volumes, both for acceptable values and for their presence in the cluster, and aborts the experiment execution if validation fails.

```yaml
env:
  - name: ANSIBLE_STDOUT_CALLBACK
    value: default
  - name: OPENEBS_NS
    value: 'openebs'
  - name: APP_PVC
    value: ''
  - name: LIVENESS_APP_LABEL
    value: ''
  - name: LIVENESS_APP_NAMESPACE
    value: ''
  - name: CHAOS_ITERATIONS
    value: '2'
  - name: DATA_PERSISTENCE
    value: ''
labels:
  name: openebs-pool-pod-failure
configmaps:
  - name: openebs-pool-pod-failure
    mountPath: /mnt
```
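The validation step can be pictured with a small Python sketch: given the volumes requested in the experiment and the names that actually exist in the namespace, collect every problem found. The function name and the specific value rules (an absolute, non-duplicate mountPath) are assumptions made for illustration; the chaos-runner's real checks may differ, and in practice the existing names would come from the Kubernetes API.

```python
import posixpath
from typing import Dict, Iterable, List


def validate_volumes(requested: List[Dict[str, str]], existing: Iterable[str],
                     kind: str = "configMap") -> List[str]:
    """Collect validation errors; an empty list means execution may proceed."""
    available = set(existing)
    errors: List[str] = []
    used_paths = set()
    for vol in requested:
        name = vol.get("name", "")
        path = vol.get("mountPath", "")
        # presence check: the user must create the volume source beforehand
        if not name:
            errors.append(f"{kind} entry has no name")
        elif name not in available:
            errors.append(f"{kind} {name!r} not found in the namespace")
        # value checks (assumed rules): absolute, non-duplicate mountPath
        if not posixpath.isabs(path):
            errors.append(f"{kind} {name!r}: mountPath {path!r} is not absolute")
        elif path in used_paths:
            errors.append(f"{kind} {name!r}: mountPath {path!r} is already in use")
        used_paths.add(path)
    return errors
```

A runner following this approach would abort the experiment whenever the returned list is non-empty, reporting all collected messages at once rather than failing on the first one.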

Improvements to the Kafka Experiment Suite

The Kafka chaos experiments are among the first application-specific chaos experiments the Litmus community has worked on, and we would like them to embody best practices/methodologies that can be applied to other applications going forward. Some of the enhancements made to this suite are described below:

Improved Health Check for Kafka Broker Cluster

While the exit checks based on a liveness message stream already introduced a Kafka-specific element to the chaos, the stream remains an optional experiment tunable, and some users choose to set up their own producer/consumer infrastructure instead. In such cases, alternate means are needed to verify the health of the Kafka brokers, rather than merely checking whether the pods are alive. In 0.9, the Kafka cluster health check has been enhanced with a ZooKeeper (ZK) check:

```shell
zkCli.sh -server <zookeeper-service>:<zookeeper-port>/<kafka-instance> ls /brokers/ids
```

ZooKeeper maintains all the Kafka cluster metadata (while individual brokers keep a watch on ZK for notifications). If a broker is lost or terminated, its ID is removed from the list maintained in ZK.
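Because each Kafka broker registers itself under `/brokers/ids` as an ephemeral znode, a lost broker drops out of that listing. Below is a minimal Python sketch of turning the zkCli output into a health verdict. It assumes the listing appears as a line such as `[0, 1, 2]` (possibly after connection log lines) and that the expected broker IDs are known in advance; the function names are hypothetical.

```python
import re
from typing import Iterable, List, Set

# Matches a bracketed, comma-separated list of numeric IDs, e.g. "[0, 1, 2]"
_ID_LIST = re.compile(r"^\[([\d,\s]*)\]$")


def parse_broker_ids(zkcli_output: str) -> Set[int]:
    """Extract broker IDs from the list printed by 'ls /brokers/ids'."""
    # Scan from the bottom, since zkCli may print log/watcher lines first
    for line in reversed(zkcli_output.strip().splitlines()):
        match = _ID_LIST.match(line.strip())
        if match:
            body = match.group(1).strip()
            return {int(x) for x in body.split(",")} if body else set()
    raise ValueError("no broker ID list found in zkCli output")


def missing_brokers(zkcli_output: str, expected_ids: Iterable[int]) -> List[int]:
    """Broker IDs that should be registered in ZooKeeper but are absent."""
    registered = parse_broker_ids(zkcli_output)
    return sorted(set(expected_ids) - registered)
```

An empty result from `missing_brokers` would indicate that every expected broker is registered; any IDs returned point to brokers that have not (yet) rejoined the cluster.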

Introduce Network Chaos Experiments for Kafka

With the enhancements made to the Pumba-based network chaoslib and the improved health checks, the Kafka chaos suite now includes network chaos experiments for:

- Kafka Broker Network Latency

- Kafka Broker Pod Network Loss

Introduce an improved Chaos-Runner, written in Go

The chaos-runner is the operational bridge between the Chaos-Operator and the LitmusChaos experiment jobs. Users will know that this is the piece that reads data off the experiment spec (and the overriding tunables from the ChaosEngine) and runs the eventual experiment job, in addition to performing the cleanup and status-patch operations. This makes it a crucial piece of the chaos orchestration framework, along with the operator (and the chaos-exporter).
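The override behaviour described above can be sketched in a few lines of Python: start from the experiment's default ENV list, then let any tunable of the same name in the ChaosEngine win, while engine-only tunables are appended. This is an illustrative sketch, not the runner's actual code (which is Ansible today and Go in the new alpha); the function name and the sample default values are hypothetical.

```python
from typing import Dict, List

EnvVar = Dict[str, str]


def merge_env(experiment_env: List[EnvVar], engine_overrides: List[EnvVar]) -> List[EnvVar]:
    """Overlay ChaosEngine tunables on the experiment's default ENV, keyed by name."""
    merged: Dict[str, str] = {e["name"]: e["value"] for e in experiment_env}
    for override in engine_overrides:
        # the ChaosEngine value wins; an existing key keeps its original position
        merged[override["name"]] = override["value"]
    # dicts preserve insertion order: defaults first, then engine-only tunables
    return [{"name": k, "value": v} for k, v in merged.items()]
```

Keying by name means the order of the experiment's defaults is preserved, which keeps the generated job spec stable across runs.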

It is imperative that the chaos-runner is performant (to trigger experiments quickly despite the increased data injected into them), is scalable (to support enhancements such as resource validation and asynchronous and random experiment execution), aids observability (contextual logs, the right hooks to construct an audit trail of experiments, etc.), and allows fine-grained control of chaos execution (such as the ability to abort experiments with immediate cleanup of chaos residue).

The 0.9 release introduces an alpha version of a new chaos-runner, rewritten in Golang (the current one uses Ansible), which lays the groundwork for the capabilities mentioned above and makes chaos execution more resilient. To try it out, provide the runner configuration as shown below:

```yaml
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: engine
  namespace: litmus
spec:
  appinfo:
    appkind: deployment
    applabel: app=nginx
    appns: litmus
  chaosServiceAccount: litmus
  components:
    runner:
      type: "go"
      image: "litmuschaos/chaos-executor:ci"
  experiments:
    - name: pod-delete
      spec:
        components: null
  monitoring: false
```

Docs, Docs & More Docs!

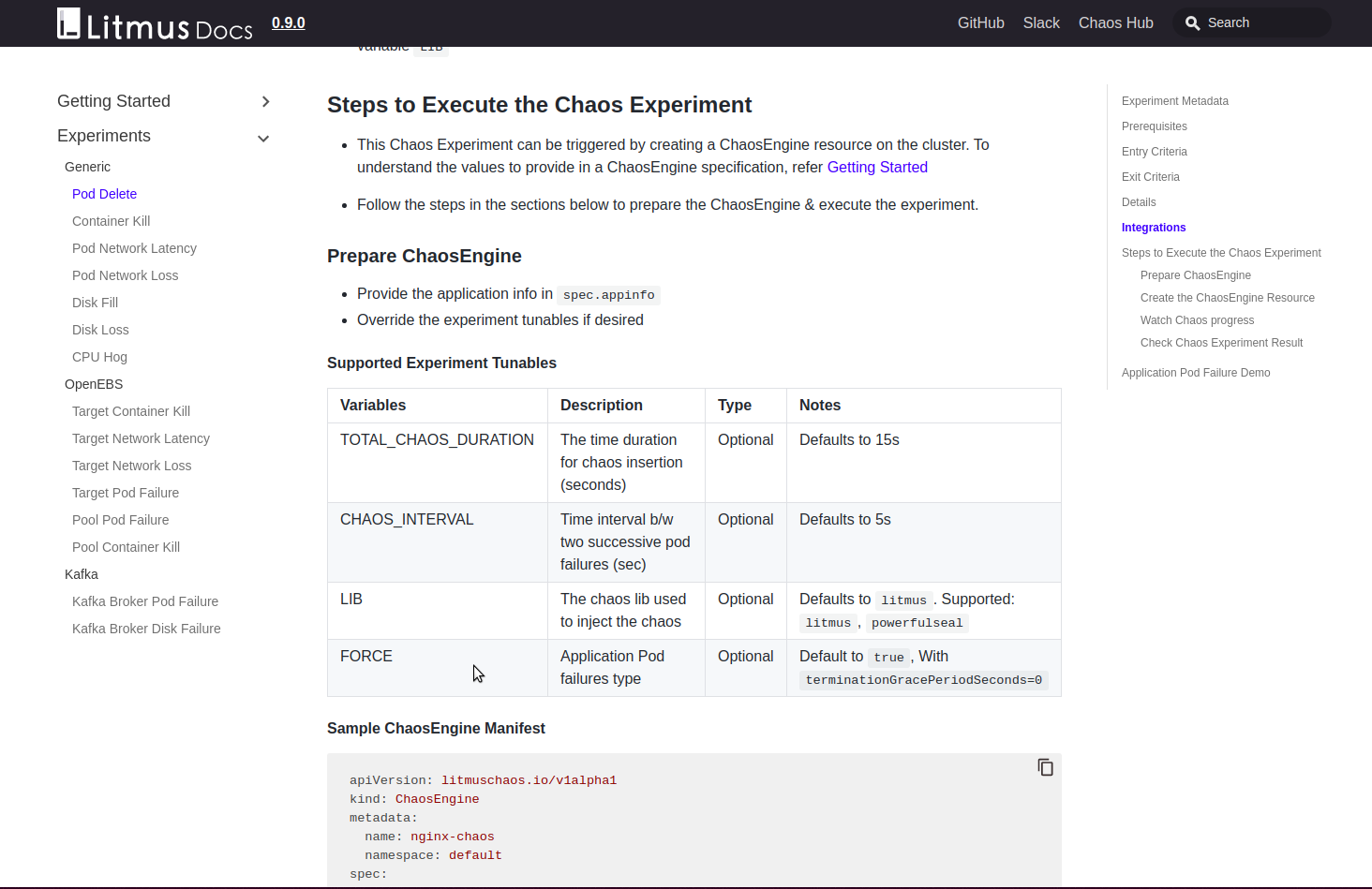

Documentation is the primary user-facing component of any open-source project, and probably the most instrumental piece in determining its usage. As can be seen, the LitmusChaos community is making efforts in every release to raise the standard of the docs. Litmus 0.9 features user guides for all currently supported experiments, which make the mandatory and optional experiment tunables explicit. The guides are also accompanied by notes where applicable, and by a sample ChaosEngine specification to help users get started with the experiments. And yes, we always welcome feedback (and, of course, PRs) to help improve our docs.

Conclusion

With each release, the intent is to get closer to a critical mass of valuable chaos experiments that help your team practice end-to-end chaos engineering in your deployment environments, while also improving the infrastructure around chaos itself. Some of the items planned for the forthcoming release include:

- Support schema validation for LitmusChaos CRDs via OpenAPI specification

- Increased BDD/e2e test coverage for the Chaos Experiments

- Upgrade the Go Chaos Runner to Beta quality

- (Application level) Resource exhaustion Chaos Experiments

With each release, we also welcome new community members whose remarkable contributions help the project grow. Special thanks to Laura Henning (@laumiH) for all the GitHub issues, PRs and really useful feedback on the project. A huge shout-out to @bhaumik for the continuous feedback that helped us improve the Kafka experiments. Do keep your contributions coming!
