About a month ago, D2iQ (known as Mesosphere at that time) announced the upcoming availability of two new products for the Kubernetes community. More on that announcement here.

The first is Konvoy, a managed Kubernetes platform. Konvoy provides a standalone production-ready Kubernetes cluster with best-in-class components (CNCF projects) for operation and lifecycle management. The solution enables developer agility and faster time to market for new Kubernetes deployments.

The second solution is Kommander, which will enable better visibility and control across multiple Kubernetes clusters. Both solutions are expected to reach general availability (GA) around late Q3 or early Q4 2019.

Both Konvoy and Kommander are complementary to KUDO, an open-source project created by D2iQ in December that provides a declarative approach to building production-grade Kubernetes operators covering the entire application lifecycle.

In this blog post, I provide step-by-step instructions on how to quickly configure a stateful-workload-ready Kubernetes cluster using Konvoy and OpenEBS.

Getting Konvoy up and running easily with OpenEBS

Now, let’s take a look at the requirements.

Prerequisites

Minimum requirements for a multi-node cluster:

Hardware

No bare metal nodes were harmed in the making of this blog.

I will install Konvoy on AWS. The default settings will create 3x t3.large master and 4x t3.xlarge worker instances (7 in total).

Note: Separately, you can also install a demo environment on your laptop using Docker by running konvoy provision --provisioner=docker, but that is not covered in this post.

Software

- CentOS 7

- jq

- AWS Command Line Interface (AWS CLI)

- kubectl v1.15.0 or newer

- Konvoy

- OpenEBS

- Elasticsearch, Kibana, Prometheus, Velero (or any other stateful workload)

Getting Prerequisites Ready (2-5 minutes)

As I mentioned above, installation requires an AWS account and an AWS user with a policy that has permission to use the related services.

You will also need awscli and kubectl installed before we begin.
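Before going further, it can help to confirm that the tools are actually on your PATH. The snippet below is a minimal sketch, not part of Konvoy: the helper name missing_tools is hypothetical, and the tool list simply mirrors the prerequisites named in this post.

```shell
# Print the names of any given commands that are not found on PATH.
# missing_tools is a hypothetical helper name, not part of any tool used here.
missing_tools() {
    missing=""
    for tool in "$@"; do
        # command -v exits non-zero when the command cannot be resolved
        if ! command -v "$tool" >/dev/null 2>&1; then
            missing="$missing $tool"
        fi
    done
    # Drop the leading space before printing
    echo "${missing# }"
}

# Tools this walkthrough relies on
result=$(missing_tools aws jq kubectl)
if [ -n "$result" ]; then
    echo "Missing tools: $result"
else
    echo "All prerequisites found"
fi
```

If anything is reported missing, the installation steps that follow cover it.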

I will be using Ubuntu 18.04 LTS as my workstation host. If you already have the prerequisites ready, you can skip to the Install D2iQ Konvoy section.

- Install awscli and jq on your workstation:

$ sudo apt-get update && sudo apt-get install awscli jq -y

- Configure the AWS CLI to use your access key ID and secret access key:

$ aws configure

- Download and install the Kubernetes command-line tool kubectl:

$ curl -LO https://dl.k8s.io/release/v1.15.0/bin/linux/amd64/kubectl
$ chmod +x kubectl
$ sudo mv kubectl /usr/local/bin/kubectl

- Confirm kubectl version:

$ kubectl version --short
Client Version: v1.15.0
Server Version: v1.15.0

- You need to subscribe to CentOS and accept the agreement on AWS Marketplace to be able to use the images. Follow the link here and subscribe to CentOS 7 (x86_64) - with Updates HVM.
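The "v1.15.0 or newer" requirement is easy to get wrong with a plain string comparison, because v1.9.x sorts after v1.15.x alphabetically. Here is a small sketch of a version check; version_ge is a hypothetical helper name, and it assumes GNU sort with the -V option, which is what Ubuntu 18.04 ships.

```shell
# Succeed when version $1 is greater than or equal to version $2.
# Assumes GNU sort (-V performs a version-aware sort).
version_ge() {
    have=${1#v}   # strip an optional leading "v"
    want=${2#v}
    # After a version sort, the smaller of the two comes first
    lowest=$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)
    [ "$lowest" = "$want" ]
}

client="v1.15.0"   # sample value, taken from the client version shown above
if version_ge "$client" "v1.15.0"; then
    echo "kubectl $client is new enough"
else
    echo "kubectl $client is too old"
fi
```

With the sample value, this reports that the client is new enough; a v1.9.x client would be rejected.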

Install D2iQ Konvoy (2-5 minutes)

- Download and extract Konvoy on your workstation. The filename should be similar to konvoy_vx.x.x_linux.tar.bz2:

$ tar -xf konvoy_v0.6.0_linux.tar.bz2

- Move the files into a directory on your PATH:

$ sudo mv ./konvoy_v0.6.0/* /usr/local/bin/

- That’s it. The first time you call Konvoy, it will pull images from the Docker registry. Now check the version:

$ konvoy --version
{
  "Version": "v0.6.0",
  "BuildDate": "Thu Jul 25 04:55:40 UTC 2019"
}

- Hold on before you try the konvoy up command. That would create nodes with the default config, but we also need all components that require persistent storage to be deployed on cloud native storage.

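The sections that follow switch addons on and off in cluster.yaml by hand, first disabling the stateful ones and later re-enabling them. As a rough sketch only, the same edit could be scripted with awk. The helper name set_addon is hypothetical, and the approach assumes the addonsList layout used in this post, where each "- name:" line is followed by its own "enabled:" line.

```shell
# Print the given file with the "enabled" flag of one addon changed.
# Usage: set_addon FILE ADDON true|false   (hypothetical helper)
set_addon() {
    awk -v addon="$2" -v state="$3" '
        # Track whether the most recent "- name:" entry is the target addon
        $1 == "-" && $2 == "name:" { in_target = ($3 == addon) }
        # Rewrite the enabled line that belongs to the target addon
        in_target && $1 == "enabled:" {
            sub(/enabled:.*/, "enabled: " state)
            in_target = 0
        }
        { print }
    ' "$1"
}

# Try it on a small sample shaped like the addonsList in this post
sample=$(mktemp)
cat > "$sample" <<'EOF'
addonsList:
- name: elasticsearch
  enabled: false
- name: traefik
  enabled: true
EOF
set_addon "$sample" elasticsearch true
rm -f "$sample"
```

The function writes to standard output instead of editing in place, so you can review the result before replacing cluster.yaml with it.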
Deploying a Konvoy cluster (15-20 minutes)

Let’s first bring up the stateless components that don’t require persistent storage.

- Create the cluster.yaml file:

$ konvoy init

- Edit the file. An example file is below:

$ nano cluster.yaml
---
kind: ClusterProvisioner
apiVersion: konvoy.mesosphere.io/v1alpha1
metadata:
  name: ubuntu
  creationTimestamp: "2019-07-31T23:04:29.030542867Z"
spec:
  provider: aws
  aws:
    region: us-west-2
    availabilityZones:
    - us-west-2c
    tags:
      owner: ubuntu
  adminCIDRBlocks:
  - 0.0.0.0/0
  nodePools:
  - name: worker
    count: 4
    machine:
      rootVolumeSize: 80
      rootVolumeType: gp2
      imagefsVolumeEnabled: true
      imagefsVolumeSize: 160
      imagefsVolumeType: gp2
      type: t3.xlarge
  - name: control-plane
    controlPlane: true
    count: 3
    machine:
      rootVolumeSize: 80
      rootVolumeType: gp2
      imagefsVolumeEnabled: true
      imagefsVolumeSize: 160
      imagefsVolumeType: gp2
      type: t3.large
  - name: bastion
    bastion: true
    count: 0
    machine:
      rootVolumeSize: 10
      rootVolumeType: gp2
      type: t3.small
  sshCredentials:
    user: centos
    publicKeyFile: ubuntu-ssh.pub
    privateKeyFile: ubuntu-ssh.pem
  version: v0.6.0

- In the same file, find the addons section and disable all AWS EBS storage options as well as the stateful components:

...
  addons:
    configVersion: v0.0.47
    addonsList:
    - name: awsebscsiprovisioner
      enabled: false
    - name: awsebsprovisioner
      enabled: false
    - name: dashboard
      enabled: true
    - name: dex
      enabled: true
    - name: dex-k8s-authenticator
      enabled: true
    - name: elasticsearch
      enabled: false
    - name: elasticsearchexporter
      enabled: false
    - name: fluentbit
      enabled: false
    - name: helm
      enabled: true
    - name: kibana
      enabled: false
    - name: kommander
      enabled: true
    - name: konvoy-ui
      enabled: true
    - name: localvolumeprovisioner
      enabled: false
    - name: opsportal
      enabled: true
    - name: prometheus
      enabled: false
    - name: prometheusadapter
      enabled: false
    - name: traefik
      enabled: true
    - name: traefik-forward-auth
      enabled: true
    - name: velero
      enabled: false
  version: v0.6.0

- Now provision the Konvoy cluster. This step will take around 10 minutes to complete:

$ konvoy up

- Konvoy will bring up CentOS images and configure 3 master and 4 worker nodes. It will also deploy the helm, dashboard, opsportal, traefik, kommander, dex, dex-k8s-authenticator, konvoy-ui, and traefik-forward-auth components. After a successful installation, you should see a message similar to the one below:

If the cluster was recently created, the dashboard and services may take a few minutes to be accessible.Kubernetes cluster and addons deployed successfully!

Run `konvoy apply kubeconfig` to update kubectl credentials. Navigate to the URL below to access various services running in the cluster.

https://a67190d830fd64957884d49fd036ea78-841155633.us-west-2.elb.amazonaws.com/ops/landing

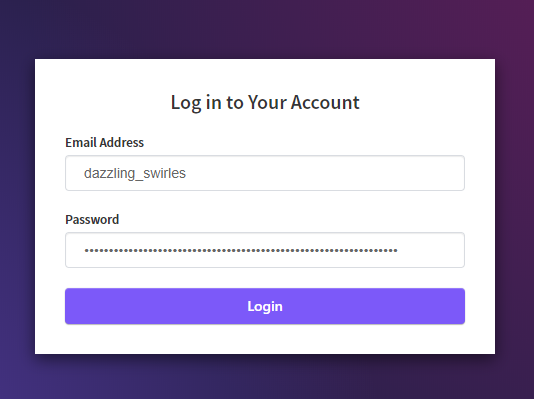

And login using the credentials below.

Username: dazzling_swirles

Password: DGwwyTFNCt6h2JSxTfqzD8PUWSLo1qPLCZJ82kQmMmaNctodWa3gd8W0O2SPFC - Configure kubectl:

$ konvoy apply kubeconfig - Confirm your Kubernetes cluster is ready:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-129-203.us-west-2.compute.internal Ready <none> 4m49s v1.15.0

ip-10-0-129-216.us-west-2.compute.internal Ready <none> 4m49s v1.15.0

ip-10-0-130-203.us-west-2.compute.internal Ready <none> 4m49s v1.15.0

ip-10-0-131-148.us-west-2.compute.internal Ready <none> 4m49s v1.15.0

ip-10-0-194-238.us-west-2.compute.internal Ready master 7m8s v1.15.0

ip-10-0-194-76.us-west-2.compute.internal Ready master 5m27s v1.15.0

ip-10-0-195-6.us-west-2.compute.internal Ready master 6m31s v1.15.0 - Looks good to me…
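If you would rather not eyeball the node list, the readiness check can be reduced to a count. This is only a sketch: count_ready is a hypothetical helper that expects rows in the format printed by kubectl get nodes --no-headers, and the two sample rows are copied from the output above.

```shell
# Count rows whose STATUS column is exactly "Ready".
# Expects `kubectl get nodes --no-headers` style rows on stdin.
count_ready() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# In a live cluster: kubectl get nodes --no-headers | count_ready
# Here, two sample rows from this post stand in for the real output.
printf '%s\n' \
    'ip-10-0-129-203.us-west-2.compute.internal Ready <none> 4m49s v1.15.0' \
    'ip-10-0-194-238.us-west-2.compute.internal Ready master 7m8s v1.15.0' \
    | count_ready   # prints 2
```

For the cluster in this walkthrough, the count against the live output should reach 7 (3 masters plus 4 workers) before you continue.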

Setting up Konvoy to use OpenEBS as the default storage provider (15-20 minutes)

Now, let’s bring up the stateful components on OpenEBS persistent storage using the cStor engine.

- Get your cluster name either from the prefix of the instance names in your AWS console or by using the command below:

$ aws --region=us-west-2 ec2 describe-instances | grep ubuntu-
...
"Value": "ubuntu-bb0c"

- Get the cluster name from the output above. In my case, the cluster name is ubuntu-bb0c.

- Replace the values of the first three variables with your settings. These variables are used by the scripts in the next steps:

export CLUSTER=ubuntu-bb0c # name of your cluster
export REGION=us-west-2 # region name
export KEY_FILE=ubuntu-ssh.pem # path to private key file
IPS=$(aws --region=$REGION ec2 describe-instances | jq --raw-output --arg pattern "$CLUSTER-worker" '.Reservations[].Instances[] | select((.Tags | length) > 0) | select(.Tags[].Value | test($pattern)) | select(.State.Name | test("running")) | [.PublicIpAddress] | join(" ")')

- Install the iSCSI client on all worker nodes using the script below:

for ip in $IPS; do
  echo $ip
  ssh -o StrictHostKeyChecking=no -i $KEY_FILE centos@$ip sudo yum install iscsi-initiator-utils -y
  ssh -o StrictHostKeyChecking=no -i $KEY_FILE centos@$ip sudo systemctl enable iscsid
  ssh -o StrictHostKeyChecking=no -i $KEY_FILE centos@$ip sudo systemctl start iscsid
done

- Set the size (in GB) of the additional disk to be included in the cStor storage pool later. In our example, 100 GB:

export DISK_SIZE=100

- Create and attach new disks to the worker nodes:

aws --region=$REGION ec2 describe-instances | jq --raw-output --arg pattern "$CLUSTER-worker" '.Reservations[].Instances[] | select((.Tags | length) > 0) | select(.Tags[].Value | test($pattern)) | select(.State.Name | test("running")) | [.InstanceId, .Placement.AvailabilityZone] | "\(.[0]) \(.[1])"' | while read instance zone; do
  echo $instance $zone
  volume=$(aws --region=$REGION ec2 create-volume --size=$DISK_SIZE --volume-type gp2 --availability-zone=$zone --tag-specifications="ResourceType=volume,Tags=[{Key=string,Value=$CLUSTER},{Key=owner,Value=michaelbeisiegel}]" | jq --raw-output .VolumeId)
  sleep 10
  aws --region=$REGION ec2 attach-volume --device=/dev/xvdc --instance-id=$instance --volume-id=$volume
done

- To simplify block device selection, I exclude the system devices from NDM (Node Disk Manager). Download the latest openebs-operator-1.x.x YAML file:

wget https://openebs.github.io/charts/openebs-operator-1.1.0.yaml

- Edit the file and add the two devices /dev/nvme0n1,/dev/nvme1n1 at the end of the exclude: line of the path filter under filterconfigs:

...
filterconfigs:
  - key: vendor-filter
    name: vendor filter
    state: true
    include: ""
    exclude: "CLOUDBYT,OpenEBS"
  - key: path-filter
    name: path filter
    state: true
    include: ""
    exclude: "loop,/dev/fd0,/dev/sr0,/dev/ram,/dev/dm-,/dev/md,/dev/nvme0n1,/dev/nvme1n1"
...

- Now, install OpenEBS:

kubectl apply -f openebs-operator-1.1.0.yaml

- Get the list of block devices we have attached to our Amazon EC2 worker nodes:

$ kubectl get blockdevices -n openebs
NAME                                           SIZE           CLAIMSTATE   STATUS   AGE
blockdevice-16b54eaf38720b5448005fbb3b2e803e   107374182400   Unclaimed    Active   23s
blockdevice-2403405be0d70c38b08ea6e29c5b0a2f   107374182400   Unclaimed    Active   14s
blockdevice-6d9b524c340bcb4dcecb69358054ec31   107374182400   Unclaimed    Active   22s
blockdevice-ecafd0c075fb4776f78bcde26ee2295f   107374182400   Unclaimed    Active   23s

- Create a StoragePoolClaim using the list of block devices from the output above. Make sure to replace the entries under blockDeviceList with your own before you run it:

cat <<EOF | kubectl apply -f -
kind: StoragePoolClaim
apiVersion: openebs.io/v1alpha1
metadata:
  name: cstor-ebs-disk-pool
  annotations:
    cas.openebs.io/config: |
      - name: PoolResourceRequests
        value: |-
            memory: 2Gi
      - name: PoolResourceLimits
        value: |-
            memory: 4Gi
spec:
  name: cstor-disk-pool
  type: disk
  poolSpec:
    poolType: striped
  blockDevices:
    blockDeviceList:
    - blockdevice-16b54eaf38720b5448005fbb3b2e803e
    - blockdevice-2403405be0d70c38b08ea6e29c5b0a2f
    - blockdevice-6d9b524c340bcb4dcecb69358054ec31
    - blockdevice-ecafd0c075fb4776f78bcde26ee2295f
EOF

- Create a default StorageClass using the new StoragePoolClaim:

cat <<EOF | kubectl apply -f -
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: openebs-cstor-default
  annotations:
    openebs.io/cas-type: cstor
    cas.openebs.io/config: |
      - name: StoragePoolClaim
        value: "cstor-ebs-disk-pool"
      - name: ReplicaCount
        value: "3"
    storageclass.kubernetes.io/is-default-class: 'true'
provisioner: openebs.io/provisioner-iscsi
EOF

- Confirm that it is set as default:

$ kubectl get sc
NAME                              PROVISIONER                                                AGE
openebs-cstor-default (default)   openebs.io/provisioner-iscsi                               4s
openebs-device                    openebs.io/local                                           26m
openebs-hostpath                  openebs.io/local                                           26m
openebs-jiva-default              openebs.io/provisioner-iscsi                               26m
openebs-snapshot-promoter         volumesnapshot.external-storage.k8s.io/snapshot-promoter   26m

- Edit the cluster.yaml file, find the addons section, and enable all stateful workload components. That’s pretty much everything except awsebscsiprovisioner, awsebsprovisioner, and localvolumeprovisioner:

  addons:
    configVersion: v0.0.47
    addonsList:
    - name: awsebscsiprovisioner
      enabled: false
    - name: awsebsprovisioner
      enabled: false
    - name: dashboard
      enabled: true
    - name: dex
      enabled: true
    - name: dex-k8s-authenticator
      enabled: true
    - name: elasticsearch
      enabled: true
    - name: elasticsearchexporter
      enabled: true
    - name: fluentbit
      enabled: true
    - name: helm
      enabled: true
    - name: kibana
      enabled: true
    - name: kommander
      enabled: true
    - name: konvoy-ui
      enabled: true
    - name: localvolumeprovisioner
      enabled: false
    - name: opsportal
      enabled: true
    - name: prometheus
      enabled: true
    - name: prometheusadapter
      enabled: true
    - name: traefik
      enabled: true
    - name: traefik-forward-auth
      enabled: true
    - name: velero
      enabled: true
  version: v0.6.0

- Now update the Konvoy cluster. This step is faster than the initial deployment and will take around 5 minutes to complete:

$ konvoy up

- After a successful update, you should see a message similar to the one below:

STAGE [Deploying Enabled Addons]
helm                    [OK]
dashboard               [OK]
opsportal               [OK]
traefik                 [OK]
fluentbit               [OK]
kommander               [OK]
prometheus              [OK]
elasticsearch           [OK]
traefik-forward-auth    [OK]
konvoy-ui               [OK]
dex                     [OK]
dex-k8s-authenticator   [OK]
elasticsearchexporter   [OK]
kibana                  [OK]
prometheusadapter       [OK]
velero                  [OK]

STAGE [Removing Disabled Addons]
awsebscsiprovisioner    [OK]

Kubernetes cluster and addons deployed successfully!

Run `konvoy apply kubeconfig` to update kubectl credentials.

Navigate to the URL below to access various services running in the cluster.
https://a67190d830fd64957884d49fd036ea78-841155633.us-west-2.elb.amazonaws.com/ops/landing
And login using the credentials below.
Username: dazzling_swirles
Password: DGwwyTFNCt6h2JSxTfqzD8PUWSLo1qPLCZJ82kQmMmaNctodWa3gd8W0O2SPFCIc

If the cluster was recently created, the dashboard and services may take a few minutes to be accessible.

- There should be around 10 persistent volumes created on OpenEBS. You can confirm that all stateful workloads got a PV using the openebs-cstor-default storage class:

$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-0b6031e2-38c7-49e0-98b6-94ba9decda19 4Gi RWO Delete Bound kubeaddons/data-elasticsearch-kubeaddons-master-2 openebs-cstor-default 18m

pvc-0fa74e6d-978a-47e6-961d-9ff6601702e6 10Gi RWO Delete Bound velero/data-minio-3 openebs-cstor-default 15m

pvc-18cfe51f-0a2c-409a-ba89-dbd89ad1c9f5 10Gi RWO Delete Bound velero/data-minio-2 openebs-cstor-default 15m

pvc-442dad69-56e5-44f1-8c43-cc90028c1d6d 10Gi RWO Delete Bound velero/data-minio-1 openebs-cstor-default 16m

pvc-69b30e82-6e47-4061-9360-54591a3cb593 30Gi RWO Delete Bound kubeaddons/data-elasticsearch-kubeaddons-data-0 openebs-cstor-default 21m

pvc-6d837a95-8607-4956-b3f5-bce4e42f5165 10Gi RWO Delete Bound velero/data-minio-0 openebs-cstor-default 16m

pvc-83498bcb-6f7e-45b8-8d25-d6f7af46af9a 4Gi RWO Delete Bound kubeaddons/data-elasticsearch-kubeaddons-master-1 openebs-cstor-default 19m

pvc-9df994f7-38af-4cb2-b0fc-2d314245a965 50Gi RWO Delete Bound kubeaddons/db-prometheus-prometheus-kubeaddons-prom-prometheus-0 openebs-cstor-default 21m

pvc-f1c0e0ad-f03e-4212-81b5-f4b9e4b70f10 30Gi RWO Delete Bound kubeaddons/data-elasticsearch-kubeaddons-data-1 openebs-cstor-default 19m

pvc-f92ca2d2-b808-4dc5-b148-362b93ef6d20 4Gi RWO Delete Bound kubeaddons/data-elasticsearch-kubeaddons-master-0 openebs-cstor-default 21m - Open the link from the output above:

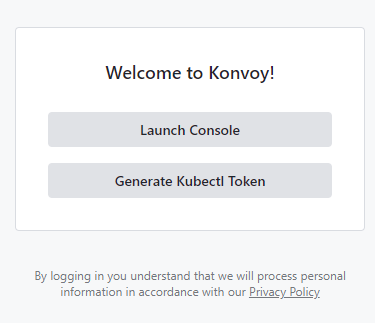

Welcome to Konvoy! - Log in using the username and password from the output:

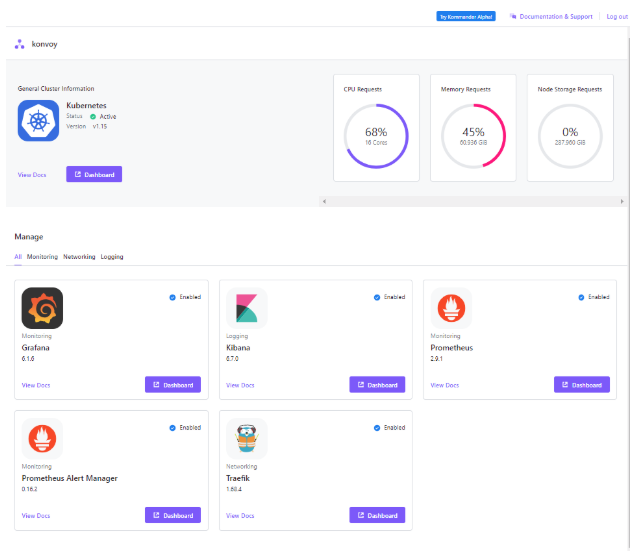

Log in to your Account - Finally, you have all components up and running and quick access to the link for monitoring and logging dashboards via Konvoy Dashboard.

Konvoy Dashboard - Here is the list of other Konvoy commands you can use at the moment (as of 0.6.0) below:

Available Commands:
  apply        Updates certain configuration in the existing kubeconfig file
  check        Run checks on the health of the cluster
  completion   Output shell completion code for the specified shell (bash or zsh)
  deploy       Deploy a fully functioning Kubernetes cluster and addons
  diagnose     Creates a diagnostics bundle of the cluster. Logs are included for the last 48h.
  down         Destroy the Kubernetes cluster
  get          Get cluster related information
  help         Help about any command
  init         Create the provision and deploy configuration with default values
  provision    Provision the nodes according to the provided Terraform variables file
  reset        Remove any modifications to the nodes made by the installer, and cleanup file artifacts
  up           Run provision, and deploy (kubernetes, container-networking, and addons) to create or update a Kubernetes cluster reflecting the provided configuration and inventory files
  version      Version for konvoy

- Have fun!

If you have any questions, feedback, or a topic that is not covered here, feel free to comment on our blog or contact me via Twitter @muratkarslioglu.

To be continued…
