The sure-shot sign of a growing community around an OSS project is a steady increase in GitHub issues for enhancements and feature requests (and of course, er, bugs, not to mention PRs for fixing those!). Organizations build demos around it. Contributors and users pop up at community sync-up calls to share feedback about how useful the project is to them and how they would like to see it shape up. And senior technology enthusiasts and developers from different organizations gladly agree to sign up as maintainers.


Litmus has started to see this happen, and we couldn’t be more excited and motivated. As a consequence, we decided that it makes sense to donate Litmus to the CNCF as a sandbox project to help with neutral governance, increased development/traction, and a community-driven roadmap, especially since we believe it is one of the first Cloud Native Chaos Engineering projects out there. While we hope it does get accepted, we as a team are focused on churning out monthly releases to incorporate the capabilities the community has decided on!

In 1.2, the focus was on improving the observability of chaos in the form of events, supporting newer platforms, adding and enhancing experiments in different suites and, not least, improving the governance info to support the amazing community build-up. Read on to learn more about it!

Increased Support for Chaos Events

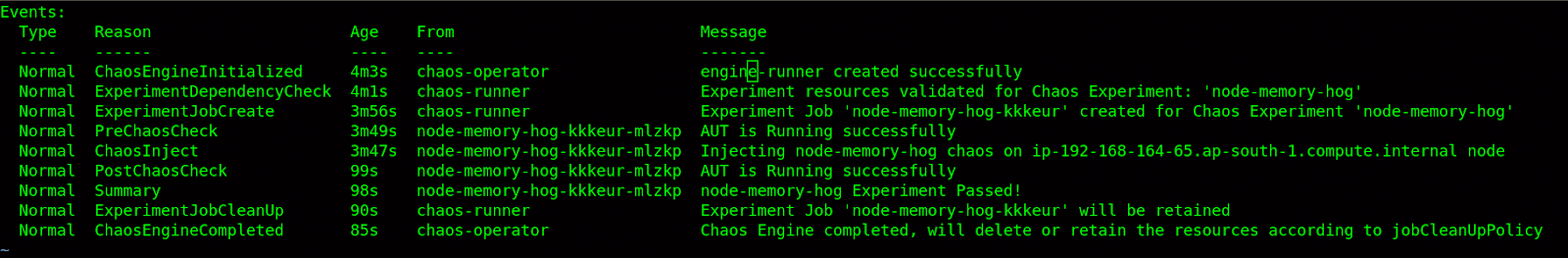

While the previous release briefly introduced Kubernetes events for the creation/removal of chaos resources, 1.2 extends this to the chaosengine, with events generated at each stage of the experiment lifecycle, giving users a complete picture of what happens during execution. Since Litmus is itself composed of different sub-components working together to perform chaos, there are various event sources (chaos-operator, chaos-runner, chaos-experiment) that generate the events. Provided below is an example of chaos events for the new node memory hog experiment.

On a lighter note, here is a tweet that illustrates this capability (i.e., events pouring in from different sources) in an alternate world! https://twitter.com/ispeakc0de/status/1237825651169579008?s=21

Experiment Failure Reason in ChaosResult

One of the constant endeavors of the Litmus team has been to improve the result/reporting infrastructure for chaos experiments, and several issues are being worked on to that end. One of the first steps in that direction is to mark the point of failure (task) in the experiment. In this release, we have introduced an additional field, .status.experimentstatus.failStep, in the ChaosResult status for this purpose. The field is patched with the name of the Ansible task that failed. This can aid in debugging the execution, especially when used with the jobCleanupPolicy set to retain. Though this enhancement in 1.2.0 is specific to experiments executed as Ansible playbooks, the techniques for achieving the same in python/golang will be made clear via the respective experiment templates in subsequent releases.
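As a rough illustration of how the new field might be consumed, here is a minimal Python sketch that pulls the failed step out of a ChaosResult that has already been loaded into a dictionary (for example, from the JSON output of the Kubernetes API). The helper name `failed_step` and the sample dictionary are hypothetical; only the field path .status.experimentstatus.failStep and the sample values come from this post.

```python
from typing import Optional


def failed_step(chaos_result: dict) -> Optional[str]:
    """Return the name of the failed task, or None if the verdict is not Fail.

    Hypothetical helper: it only reads the fields described in this post.
    """
    status = chaos_result.get("status", {}).get("experimentstatus", {})
    if status.get("verdict") != "Fail":
        return None
    return status.get("failStep")


# Dictionary mirroring the ChaosResult example in this section.
sample_result = {
    "apiVersion": "litmuschaos.io/v1alpha1",
    "kind": "ChaosResult",
    "metadata": {"name": "engine-pod-delete"},
    "spec": {"engine": "engine", "experiment": "pod-delete"},
    "status": {
        "experimentstatus": {
            "phase": "Completed",
            "verdict": "Fail",
            "failStep": "Checking whether application pods are in running state",
        }
    },
}

print(failed_step(sample_result))
```

A script like this could be one way to surface the failing task in a CI pipeline before digging into the retained experiment pod.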

    apiVersion: litmuschaos.io/v1alpha1
    kind: ChaosResult
    metadata:
      name: engine-pod-delete
      labels:
        chaosUID: 83c439de-6082-11ea-89a5-0cc47ab587b8
    spec:
      engine: engine
      experiment: pod-delete
    status:
      experimentstatus:
        phase: Completed
        verdict: Fail
        failStep: Checking whether application pods are in running state

Integration with Chaostoolkit

Litmus was built with the principles of extensibility in mind and is already capable of using other popular chaos tools such as Pumba & Powerfulseal. In this release, a new category of charts, submitted by the amazing folks from Intuit, has been introduced based on integration with another popular chaos tool called chaostoolkit. The experiments are python scripts with their own steady-state checks, which in turn invoke the chaostoolkit libraries to inject the chaos. Needless to say, these scripts are containerized, and Litmus orchestrates these experiments via the standard ChaosEngine/ChaosExperiment interfaces, combining the benefits of orchestration with the reuse of existing chaostoolkit-based scripts.

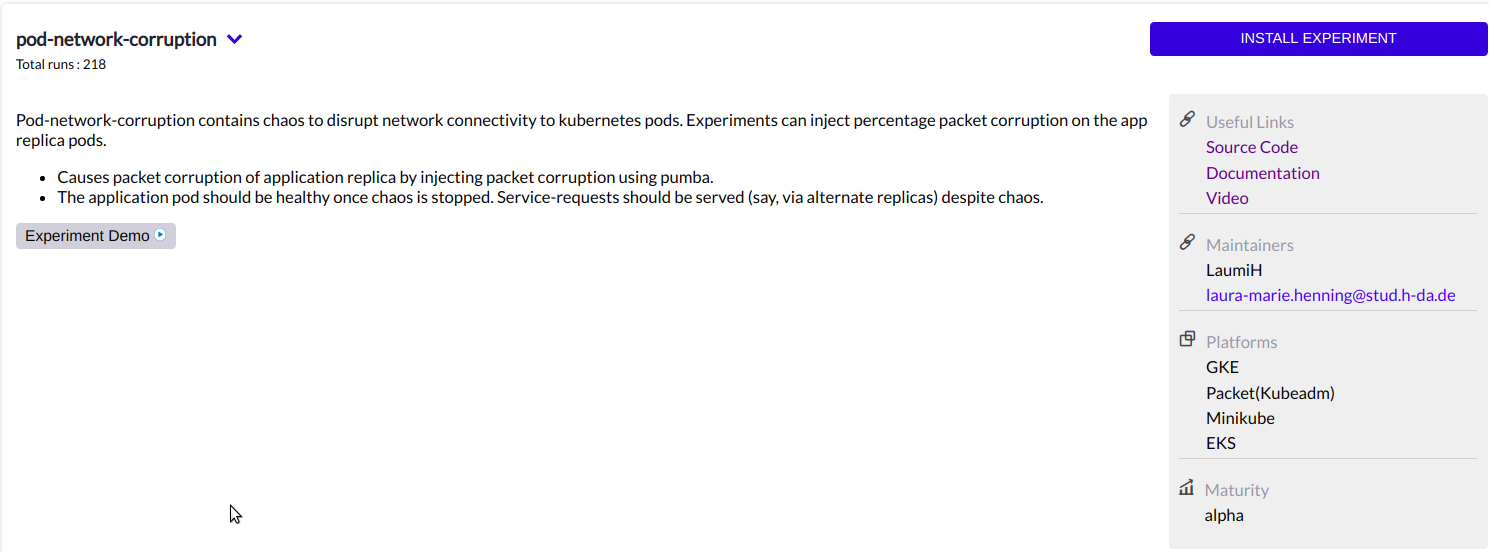

Support for Chaos on Amazon EKS platform

One of the criteria in the experiment maturity guidelines we formed some time back was to support chaos on multiple Kubernetes platforms, including EKS. In this release, we have certified all experiments in the Generic Chaos suite on EKS. Experiments belonging to other suites are being tested as of writing this blog, and upcoming experiments will be too!

Support for RAMP time in an experiment

One of the common features of any “testing” or “experimentation” toolset is the ability to provide a “warm-up” and “soak” time before and after the test/experiment itself, thereby enabling the system to make a better decision on the steady-state and avoid transient statuses. This is especially true when dealing with the network, storage, or other infra components. In 1.2.0, we have introduced an option to specify a RAMP_TIME in the experiment via an ENV variable (typically provided as an overriding value in the chaosengine, with the default set to no wait / zero seconds) for precisely this purpose.
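The ordering described above can be sketched in a few lines of Python. This is only an illustration of the warm-up/soak idea, not the actual experiment code: the function `run_with_ramp`, its callbacks, and the injectable `sleep` parameter are all hypothetical names introduced here, while the defaults mirror the values mentioned in this post (120 seconds of chaos, zero ramp time).

```python
import time
from typing import Callable, List


def run_with_ramp(
    inject_chaos: Callable[[int], None],
    post_chaos_check: Callable[[], bool],
    total_chaos_duration: int = 120,
    ramp_time: int = 0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Wait ramp_time, inject chaos, wait ramp_time again, then run the check."""
    if ramp_time > 0:
        sleep(ramp_time)  # warm-up before injecting chaos
    inject_chaos(total_chaos_duration)
    if ramp_time > 0:
        sleep(ramp_time)  # soak before the post-chaos steady-state check
    return post_chaos_check()


# Usage with stand-in callbacks and a recording "sleep", so nothing really waits.
waits: List[float] = []
passed = run_with_ramp(
    inject_chaos=lambda duration: None,
    post_chaos_check=lambda: True,
    total_chaos_duration=120,
    ramp_time=60,
    sleep=waits.append,
)
print(passed, waits)
```

Passing the sleep function in as a parameter is just a convenience for trying the sketch out quickly; a real experiment would simply wait.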

    experiments:
      - name: node-memory-hog
        spec:
          components:
            env:
              # set chaos duration (in sec) as desired
              - name: TOTAL_CHAOS_DURATION
                value: '120'
              # time to wait before injecting chaos and before post-chaos steady state checks
              - name: RAMP_TIME
                value: '60'
              ## specify the size as percent of total available memory (in percentage %)
              ## default value 90%
              - name: MEMORY_PERCENTAGE
                value: '90'
              # It supports GKE and EKS Platform
              # GKE is the default Platform
              - name: PLATFORM
                value: 'GKE'

New Chaos Experiments On the Block

As with every release, we have some new experiments added to the chaos suites this time too.

- OpenEBS Pool Disk Loss (OpenEBS): This experiment is supported on GKE/AWS and reuses the disk loss chaoslib. On a different note, we even recommend that OpenEBS users try out the LitmusChaos experiments at the various stages of their day-0/day-1 journey to verify the resiliency of their OpenEBS deployments.

- Node Memory Hog (Generic): Created on popular request, this experiment accepts a memory consumption percentage (of the node memory) and hogs that much memory, leading to a resource crunch on the node, which may cause forced evictions of the application pods. This experiment uses stress-ng (an improvement over the standard stress library in Linux), which allows us to expand the scope of resource chaos widely. Trust us, we are on it!
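To give a feel for how a memory percentage and a chaos duration could translate into a stress-ng invocation, here is a small Python sketch that only builds the command line and does not run it. How the Litmus experiment actually invokes stress-ng is not described in this post, so the function `stress_ng_command` and the choice of a single vm worker are assumptions; the `--vm`, `--vm-bytes` (which accepts a percentage of available memory) and `--timeout` options are standard stress-ng flags.

```python
from typing import List


def stress_ng_command(memory_percentage: int = 90, duration_sec: int = 120) -> List[str]:
    """Build (but do not run) a stress-ng command that hogs memory.

    Hypothetical helper: the real experiment may use different options.
    """
    if not 0 < memory_percentage <= 100:
        raise ValueError("memory_percentage must be between 1 and 100")
    if duration_sec <= 0:
        raise ValueError("duration_sec must be positive")
    return [
        "stress-ng",
        "--vm", "1",                               # one memory worker (assumption)
        "--vm-bytes", f"{memory_percentage}%",     # share of available memory
        "--timeout", f"{duration_sec}s",           # stop after the chaos duration
    ]


print(" ".join(stress_ng_command(90, 120)))
```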

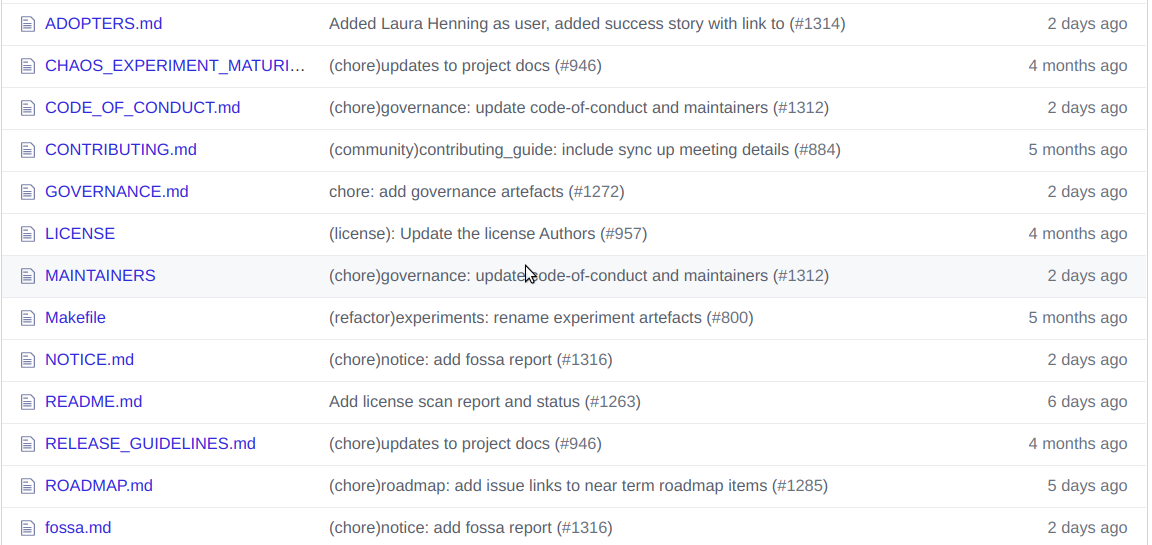

Governance - What is that?

While Litmus has been a project following the standard OSS principles, there were some crucial missing artifacts in the GitHub repositories around governance. Governance, in layman's terms, pertains to clearly calling out details such as who the maintainers are, what the process is for becoming one, what the near-term/long-term roadmap is and how it is decided, what licenses are involved, what kind of code/build dependencies exist, who the initial adopters/users are, how releases are carried out, etc. With this release, these details have been incorporated into the Litmus repo to give the growing community the essential information for making the right decisions around contributions/adoption.

Other Notable Improvements

Some of the other improvements that made it into 1.2 include the following:

- The jobCleanupPolicy (ChaosEngine) is now extended to the chaos-runner pod, with the runner & monitor pods automatically removed (along with the experiment pod) if the policy is set to delete.

- The chaos-runner properties override capability in the ChaosEngine (under .spec.components.runner) is now extended to imagePullPolicy & command/args attributes as well to aid debug/development.

- The experiments now run with a reduced resource footprint (in terms of pods) in cases where node chaos is performed, by replacing the use of daemonsets with jobs.

- An NFS liveness client has been introduced to aid with NFS storage provisioner chaos.

- A cleaner documentation website with no stale (pre 1.0) data!

For a full list of enhancements/fixes, please refer to the release notes.

Conclusion

As with 1.1, one of the highlights of this release was that most of the items picked came purely from community requests. And there are many more to be taken up! As with any project picking up momentum, there is a considerable amount of prioritization involved, which we aim to accomplish during the monthly community sync-up calls. Daily public standups are conducted at 16:00 IST, which the community can join to catch up on how things are progressing and to seek info/help on any contributions they may be working on. And don’t hesitate to reach out to us on our increasingly chatty Slack channel on the Kubernetes workspace! Some of the items coming out soon (backlogs generated from previous sync-ups) include:

- Support for AdminMode of chaos, with all chaos resources centralized in a single namespace & application chaos controlled via opt-in annotation model

- Improved Chaos Abort functionality for cases where chaos processes are started on target containers

- OpenEBS NFS chaos experiments

- Improved experiment logging via better context/task descriptions

- Improvements to chaos metrics visualization

Last but not least, a hearty welcome to our new maintainers Jayesh Kumar (@k8s-dev) & Sumit Nagal (@sumitnagal) and a big shoutout to our awesome contributors & users: David Gildeh (@dgildeh), Laura Henning (@laumiH) & Christopher Pitstick (@cpistick-argo).
