Get Started with LitmusChaos

In this blog, I will walk through setting up a quick demo environment for Litmus. Before jumping in, let's do a quick recap. Litmus is a framework for practicing chaos engineering in cloud-native environments. It provides a chaos operator, a large set of chaos experiments on its hub, detailed documentation, and a friendly community. Litmus is easy to use, but a quick demo environment in which to install Litmus, run experiments, and learn chaos engineering on Kubernetes is, of course, helpful.

Litmus Demo Environment

The Litmus Demo is a quick way to introduce yourself to the world of Cloud-Native Chaos Engineering. It helps you familiarize yourself with running LitmusChaos experiments in a realistic application environment running multiple services on a Kubernetes cluster. By following the instructions in this blog, you will be able to create a cluster, install a sample application that will be subjected to chaos, build the chaos infrastructure & run the chaos experiments, all in a matter of minutes.

When, as a community, we were pondering a quick, lightweight environment for this automated “Demo” setup, we just couldn’t look beyond KinD (cluster infra) & the immensely popular Sock-Shop (sample microservices application). However, the demo script also provides a more “extensive” platform in GKE, in case you want to explore the larger suite of experiments.

As you may have guessed, the idea is to get down to experiencing chaos injection without brooding over documentation & copy-pasting multiple Kubernetes manifests. This demo is designed to help you get your hands dirty injecting failures with a chaos framework & finding out what happens, rather than to instill in-depth knowledge of standard practices around chaos engineering. We are hoping this will get you there eventually!

LitmusChaos Prerequisites

Docker, kubectl & Python 3.7+ (with the PyYAML package) are all you need for running the KinD-based chaos demo. If GKE is your platform of choice, you may also need to configure gcloud on your workstation (or test-harness machines, if you are old fashioned!).

Know Your Cluster

As described earlier, this demo environment supports different platforms (KinD, GKE).

- KinD: A 3-node KinD cluster is set up, pre-installed with the sock-shop demo application, the Litmus chaos CRDs, the operator, and a minimal set of prebuilt chaos experiment CRs.

- GKE: A 3-node GKE cluster is set up, pre-installed with the sock-shop demo application, the Litmus chaos CRDs, the operator, and the full generic Kubernetes chaos experiment suite.

NOTE: Support for other platforms, such as AWS, will be added very soon.

Getting started:

To get started with any of the above platforms, follow these steps.

1. Clone the Litmus demo repository to your system

This command clones the master branch of the litmus-demo repository to your system.

git clone https://github.com/litmuschaos/litmus-demo.git

2. Check out the available options on the demo script

cd litmus-demo

./manage.py -h

Output:

usage: manage.py [-h] {start,test,list,stop} ...

Spin up a Demo Environment on Kubernetes.

positional arguments:
  {start,test,list,stop}
    start               Start a Cluster with the demo environment deployed.
    test                Run Litmus ChaosEngine Experiments inside litmus demo
                        environment.
    list                List all available Litmus ChaosEngine Experiments
                        available to run.
    stop                Shutdown the Cluster with the demo environment
                        deployed.

optional arguments:
  -h, --help            show this help message and exit

So, we can see that the available arguments are start, test, list, and stop, with their usage.
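The help text above has the shape that Python's argparse module produces for a parser with subcommands. As a rough illustration only (this is not the actual source of manage.py), a parser of that kind could be sketched as follows. The subcommand names and descriptions are copied from the help output, and the `--platform` and `--test` flags come from the commands used later in this post; attaching `--platform` to every subcommand and leaving `--test` empty by default are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a hypothetical parser that mirrors the help text of the demo script."""
    parser = argparse.ArgumentParser(
        prog="manage.py",
        description="Spin up a Demo Environment on Kubernetes.",
    )
    subparsers = parser.add_subparsers(dest="command")

    descriptions = {
        "start": "Start a Cluster with the demo environment deployed.",
        "test": "Run Litmus ChaosEngine Experiments inside litmus demo environment.",
        "list": "List all available Litmus ChaosEngine Experiments available to run.",
        "stop": "Shutdown the Cluster with the demo environment deployed.",
    }
    for name, text in descriptions.items():
        sub = subparsers.add_parser(name, help=text)
        # The post states that --platform defaults to kind.
        # Assumption: every subcommand accepts it.
        sub.add_argument("--platform", default="kind")
        if name == "test":
            # Assumption: leaving out --test means "run every experiment".
            sub.add_argument("--test", default=None)
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch is
# safe to run anywhere.
args = build_parser().parse_args(["test", "--platform", "kind", "--test", "pod-delete"])
print(args.command, args.platform, args.test)
```

Running `build_parser().print_help()` prints a usage summary in the same general layout as the output shown above, although the exact section titles vary between Python versions.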

3. Check the available experiments on the desired platform

You can also view the experiments supported by each platform as of today (the folks in the Litmus community are busy extending platform interoperability for the chaos experiments, so stay tuned!).

For KinD platform

./manage.py list --platform kind

NOTE: The default value of --platform is kind, so ./manage.py list will also give the same output.

Available Litmus Chaos Experiments:

1. pod-delete

2. container-kill

3. node-cpu-hog

4. node-memory-hog

For GKE platform

./manage.py list --platform GKE

Output:

Available Litmus Chaos Experiments:

1. container-kill

2. disk-fill

3. node-cpu-hog

4. node-memory-hog

5. pod-cpu-hog

6. pod-delete

7. pod-memory-hog

8. pod-network-corruption

9. pod-network-latency

10. pod-network-loss

4. Installing Demo Environment

Install the demo environment on one of the platforms using the start argument:

KinD Cluster

./manage.py start --platform kind

Once done, wait for all the pods to reach the ready state. You can monitor this using:

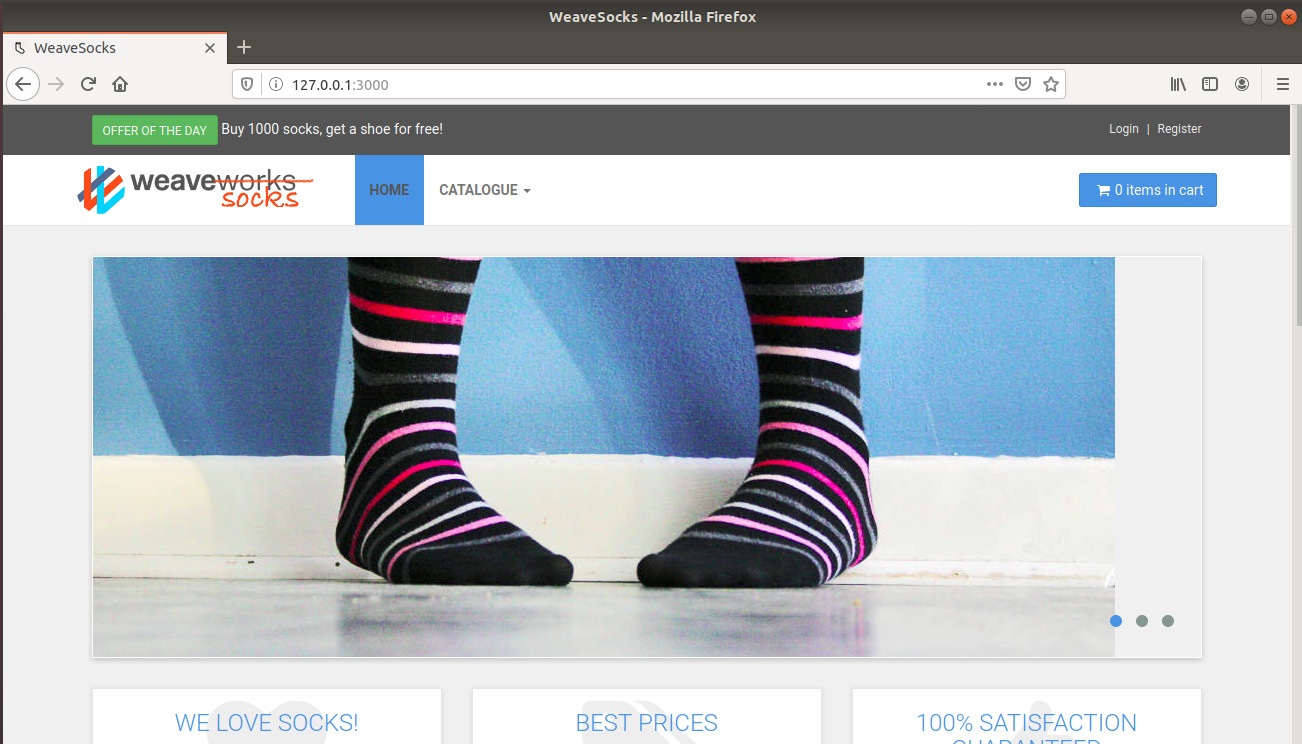

watch kubectl get pods --all-namespaces

Once all pods are in the Running state, we can access the sock-shop application through its web UI, which helps us visualize the impact of chaos on the application and see whether it stays available after chaos injection. Follow these steps to access the web UI.

- Get the port of frontend deployment

kubectl get deploy front-end -n sock-shop -o jsonpath='{.spec.template.spec.containers[?(@.name == "front-end")].ports[0].containerPort}'

Output:

8079

- Perform port forwarding on the port obtained above

kubectl port-forward deploy/front-end -n sock-shop 3000:8079

Output:

Forwarding from 127.0.0.1:3000 -> 8079
Forwarding from [::1]:3000 -> 8079

Open the forwarded address (http://127.0.0.1:3000) in a web browser to reach the sock-shop web UI running on the KinD cluster.
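While the port-forward above is running, the front-end should answer HTTP requests on 127.0.0.1:3000, so availability can also be checked from a script instead of a browser. Here is a minimal sketch using only Python's standard library; the function name and the timeout value are illustrative choices and are not part of the demo script.

```python
import http.client
import urllib.error
import urllib.request


def is_available(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP GET to url completes with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, malformed response, or an HTTP
        # error status (urlopen raises HTTPError for 4xx and 5xx).
        return False


if __name__ == "__main__":
    # Assumes the port-forward from the step above is still running.
    print(is_available("http://127.0.0.1:3000"))
```

Calling this probe before, during, and after an experiment gives a quick view of whether the front-end stayed reachable.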

GKE Cluster

./manage.py start --platform GKE

Once done, you will get an output containing Ingress Details:

Ingress Details:

** RUNNING: kubectl get ingress basic-ingress --namespace=sock-shop

NAME HOSTS ADDRESS PORTS AGE

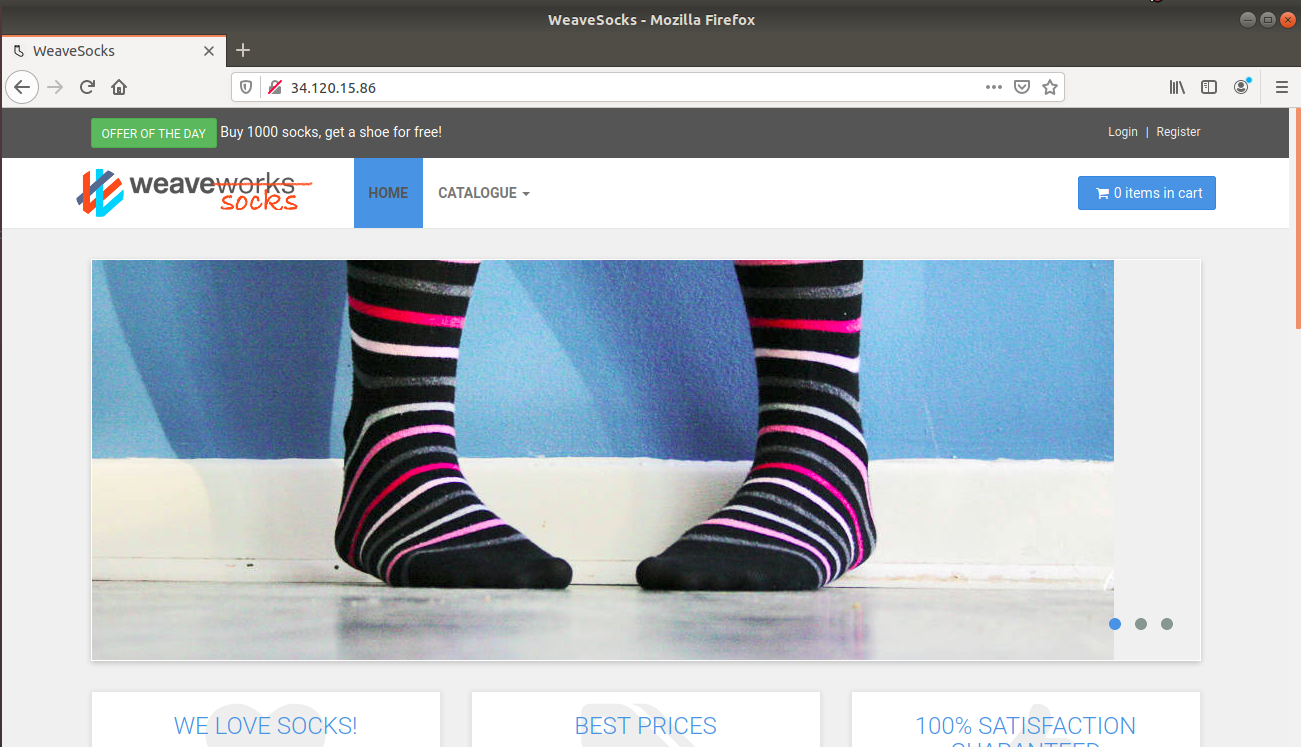

basic-ingress * 34.120.15.86 80 95s

You can access the web application in a few minutes at http://34.120.15.86After waiting for a few minutes, you can check the given URL (here http://34.120.15.86) it will take you to the sock-shop catalog UI on the localhost browser. This makes sure that the sock-shop application is deployed in the cluster-infra. The sock-shop catalog UI will look like:

To check that all pods are ready, run the following command and confirm that every pod is in the Running state.

watch kubectl get pods --all-namespaces

5. Running Chaos in Demo Environment
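Before injecting chaos, it can help to confirm from a script that every pod has reached the Running state. The sketch below parses the text printed by kubectl get pods --all-namespaces. The function names are hypothetical, the sample pod names are made up for illustration, and the parser assumes the default column layout that starts with NAMESPACE, NAME, READY, STATUS.

```python
import subprocess
from typing import List


def pods_not_running(kubectl_output: str) -> List[str]:
    """Return "namespace/name" for every pod whose STATUS is not Running."""
    lines = kubectl_output.strip().splitlines()
    if not lines:
        return []
    header = lines[0].split()
    ns_col, name_col, status_col = (
        header.index(column) for column in ("NAMESPACE", "NAME", "STATUS")
    )
    pending = []
    for line in lines[1:]:
        fields = line.split()
        if len(fields) <= status_col:
            continue  # skip blank or truncated lines
        # Note: pods of finished jobs (STATUS Completed) are reported too.
        if fields[status_col] != "Running":
            pending.append(f"{fields[ns_col]}/{fields[name_col]}")
    return pending


def fetch_pods() -> str:
    """Run the kubectl command used in this post and return its output."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "--all-namespaces"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout


# Made-up sample output, so the sketch runs without a cluster.
sample = """\
NAMESPACE   NAME                 READY   STATUS              RESTARTS   AGE
sock-shop   front-end-example    1/1     Running             0          2m
sock-shop   carts-db-example     0/1     ContainerCreating   0          2m
"""
print(pods_not_running(sample))
```

On a live cluster, `pods_not_running(fetch_pods())` returns an empty list once everything is Running.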

We can run all the chaos experiments listed by ./manage.py list, or run only the experiments we select, using ./manage.py test as shown:

For running all experiments

./manage.py test --platform <platform-name>

For running a selective experiment

./manage.py test --platform <platform-name> --test <test-name>

Follow step 3 to get the available test names.

Example: For running the pod-delete experiment.

./manage.py test --platform kind --test pod-delete

Take this opportunity to view what happens as the experiment runs its course. Pods may be killed, containers may be restarted, and resource usage may spike, each impacting the application in its own way. In a typical run of a chaos experiment, a hypothesis is built around each of these failures. While we discuss this in detail in upcoming blogs, the endeavor in our case is to find out whether the sock-shop app continues to stay available across these failures!

The experiment results (Pass/Fail) are derived from the simple criterion of app availability post chaos & are summarized on the console once the execution completes.
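As a rough illustration of an availability-based verdict, the sketch below reports Pass only if every post-chaos probe succeeds. It shows the general idea only and is an assumption, not the demo script's actual logic; the function name, the number of attempts, and the delay are hypothetical.

```python
import time
from typing import Callable


def availability_verdict(
    probe: Callable[[], bool], attempts: int = 5, delay: float = 1.0
) -> str:
    """Return "Pass" if every probe call succeeds, otherwise "Fail"."""
    for attempt in range(attempts):
        if not probe():
            return "Fail"
        if attempt < attempts - 1:
            time.sleep(delay)  # wait between consecutive probes
    return "Pass"


# Stand-in probes; a real probe would check the application's URL.
print(availability_verdict(lambda: True, attempts=3, delay=0))
print(availability_verdict(lambda: False, attempts=3, delay=0))
```

Requiring every probe to succeed is the strictest choice; a more tolerant variant could allow a bounded number of failures while the application recovers.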

For more details about the flags used to configure and run the chaos tests, please refer to the parameter tables in the test section.
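If you want to drive the demo from another script, the two invocation forms shown in this step can be assembled as argument lists. This small sketch uses only the test subcommand and the --platform and --test flags that appear in this post; the helper name is hypothetical.

```python
from typing import List, Optional


def build_test_command(platform: str = "kind", test: Optional[str] = None) -> List[str]:
    """Assemble the manage.py invocation for all experiments or a single one."""
    command = ["./manage.py", "test", "--platform", platform]
    if test is not None:
        # A single experiment is selected with --test <test-name>.
        command += ["--test", test]
    return command


print(" ".join(build_test_command("kind", "pod-delete")))
# prints: ./manage.py test --platform kind --test pod-delete
```

The returned list can be passed to `subprocess.run(command, check=True)` from inside the litmus-demo directory.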

6. Deleting Cluster / Cluster Clean up

To shut down and destroy the cluster when you're finished, run the following commands:

KinD cluster

./manage.py --platform kind stop

GKE cluster

./manage.py --platform GKE stop --project {GC_PROJECT}

Conclusion

Did you get a taste of chaos in a Kubernetes environment? Did you end up killing different microservices to gauge the impact? Are you inclined to understand what happened when the failures were injected & what options the chaos framework provides to control & assess these? Did you make a mental map of how your microservices might behave if the same failures were injected into them, and of how to mitigate the impact of these failures?

If you asked yourself these questions, then the purpose of this litmus-demo is met. It also means you are onwards and upwards in your chaos engineering journey!

Are you an SRE or a Kubernetes enthusiast? Does Chaos Engineering excite you?

Join our community on Slack for detailed discussion, feedback & regular updates on chaos engineering for Kubernetes.

(#litmus channel on the Kubernetes workspace)

Check out the Litmus Chaos GitHub repo and do share your feedback.
